Introduction

During a scene in Wall Street: Money Never Sleeps,1 Oliver Stone’s sequel to his film Wall Street, the young trader Jake Moore asks investment bank CEO Bretton James a question: “What’s your number? The amount of money you would need to be able to walk away from it all and just live happily-ever-after. See, I find that everybody has a number and it’s usually an exact number, so what is yours?” The CEO pauses for effect and delivers a one-word response: “More.”

In this scene, from a film prophetically begun before the 2008 worldwide financial meltdown, the mogul displays a fundamental trait of capitalist culture: the need for “constant, endless growth.”2 More money. More products. More profit. And now, with so much of our interaction occurring online: more friends, more “likes,” and more items shared.

The most popular network space for this social interaction is Facebook, the world’s largest online social network. The site has more than 1.3 billion active monthly users,3 and is the second most visited website in the United States.4 Facebook functions as a prime example of what Mirko Tobias Schäfer calls “bastard culture,” a participatory culture industry where “the interactions between users and corporations, and the connectivity between markets and media practices are inherently intertwined.”5 A large portion of these interactions on Facebook consists of the more than 3 billion likes and comments its users post every day.6

A significant component of Facebook’s interface is its revealed enumerations of these “likes,” comments, and more. Such metrics of social interaction not only present themselves throughout the site, but in many cases stand in for the textual content they represent. A list of friends who like a status becomes “8 people like this.” The comments added to a photo become “View all 5 comments.” These metrics and others represent a large-scale, software-driven quantification of our social activity online.

What are the effects of this enumeration, of these metrics that count our social interactions? In other words, how are the designs of Facebook leading us to act, and to interact in certain ways and not in others? For example, would we add as many friends if we weren’t constantly confronted with how many we have? Would we “like” as many ads if we weren’t told how many others liked them before us? Would we comment on others’ statuses as often if we weren’t told how many friends responded to each comment?

In this paper, I question the effects of metrics from three angles. First I examine how our need for personal worth, within the confines of capitalism, transforms into an insatiable “desire for more.” Second, with this desire in mind, I analyze the metric components of Facebook’s interface using a software studies methodology, exploring how these numbers function and how they act upon the site’s users. Finally, I discuss my software, born from my research-based artistic practice, called Facebook Demetricator (2012-present). Facebook Demetricator removes all metrics from the Facebook interface, inviting the site’s users to try the system without the numbers and to see how that removal changes their experience. With this free web browser extension, I aim to disrupt the prescribed sociality produced through metrics, enabling a social media culture less dependent on quantification.
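The basic operation of removing a metric from interface text can be sketched in a few lines. The following Python is an illustration only and is not the code of Facebook Demetricator itself; the function name `demetricate` and the pattern it uses are assumptions made for this example, and the sample strings are the interface phrases quoted earlier.

```python
import re

# Hypothetical pattern (an assumption for this sketch): a run of digits with
# optional thousands separators, plus any whitespace that follows it.
_COUNT = re.compile(r"\b\d+(?:,\d{3})*\s*")


def demetricate(text: str) -> str:
    """Return the interface text with its visible counts removed."""
    return _COUNT.sub("", text).strip()


for phrase in ("8 people like this", "View all 5 comments"):
    print(demetricate(phrase))
```

The text around the number survives, so the interface still reports that people liked a post or left comments; only the quantity is withheld.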

Capitalism, Human Needs, and the Desire for More

Analyzing how metrics are working across the Facebook interface requires examining what I call our “desire for more.” When faced with a number, why do we want that number to go higher? Why is more—more friends, more “likes,” more shares—better than less? Why aren’t we satisfied with stability in the face of quantification?

I would argue that the answer lies in the relationship between our evolutionarily developed human needs and the pervasiveness of capitalism within western society. If, as Marx and Engels have said, capital has equated personal worth with exchange value, then its reverse should hold true:7

exchange value = personal worth

Personal worth, as adapted and developed through evolution, is an essential human need. It falls high up on Maslow’s hierarchy of needs as esteem—the need for self-respect, self-confidence, etc.8 Within Edward Deci’s Self Determination Theory, personal worth functions as an intrinsic motivator, helping to satisfy our innate need for relatedness, or our need to be valued by and connected with others.9

This psychological need for personal worth now contends with what Mark Fisher calls capitalist realism, “the widespread sense that not only is capitalism the only viable political and economic system, but also that it is now impossible even to imagine a coherent alternative to it.”10 Capitalism “subsumes and consumes all previous history,” and its “system of equivalence” equates all cultural objects—even those critical of the system11—with a monetary value.12 Free trade has thus become the “single, unconscionable freedom”13 we’re left to exercise in our interactions with others.

Our need for personal worth is highly dependent on these social interactions, as both relatedness and esteem are necessarily measured in relation to others. If this essential human need can only be fulfilled within the confines of capitalism, then it stands to reason that we are subject to a deeply ingrained desire for more: a state of being where more exchange, more value, or more trade equals more personal worth. In other words, our evolutionarily developed desire for worth is an intrinsic need, which translates, through the “pervasive atmosphere”14 of capitalist realism, into a desire for more.

Business Ontology, Audit Culture, and the Role of Quantification

So if our need for personal worth manifests as a desire for more, how do we know when we have it? What are the epistemologies at play? And how are the mechanisms of capital driving, and informing, this desire?

Capitalism is “always about growth.”15 This “growth fetish,”16 as Fisher refers to it, serves the needs of capital in multiple ways. First, growth enables capitalism’s survival, as the system literally cannot exist without constant expansion. This is evident in capitalism’s need for continuous additions of enumerable items, such as jobs or assets. Perhaps less obviously but more importantly, we see this in the business ontology that pervades modern society, driving our tendency to consider everything within business terms.

This business ontology has led to the spread of audit culture, or the increasing application of observational measurement—well beyond its original use in financial management—to previously self-governed public sector institutions. As Shore and Wright argue, a primary example of this can be found in the modern university, where the audit is presented as a tool to improve teaching quality and student outcomes, but instead erects a “neo-liberal” political technology that improves little while centralizing power away from faculty and towards administration.17

The primary mechanism that enables both business ontology and audit culture is quantification—the reduction and enumeration of “things and energies and practices and perceptions into uniform parts.”18 Quantification “intrudes into every kind of natural system scientists care to investigate,” as there are “always things to count.”19 It is used by individuals and States to claim an objective separation between facts and politics,20 and, as a science of measurement, was partially driven by the expansion of capitalism.21 Quantification facilitates the evaluation of capital’s progress and the measurement of capital’s growth in numerical terms. The audit employs quantification as its way of understanding progress and tracking compliance.

Thus, within our system of capital, quantification becomes the way we evaluate whether our desire for more is being fulfilled. If our numbers are rising, our desire is met; if not, it remains unmet. Personal worth becomes synonymous with quantity. Further, through strategies like the audit, the pursuit of capital establishes a desire to impress others through quantification that also plays into Bourdieu’s ideas of symbolic and social capital. We want to “win” the confidence of our friends, to accumulate a capital of “social connections, honourability and respectability” that can be exchanged later within our social system.22 As we will see later on, when combined with metric visibility in Facebook, our desire for social capital leads us to internalize the need to excel in a quantified manner.

Analysis and Practice as Ways of Understanding Facebook Quantification

In the case of social networking sites like Facebook, the vehicle that facilitates and presents these quantifications is software. In this context, I define software as a human-designed structure for computation. Software consists of both the interface that users see, read, and manipulate and the underlying algorithms23 and data structures24 that configure and store the data that the interface (re)presents. If we are to understand the effects of software, and the ways in which its components act upon its users, we must consider the political, cultural, and social implications of the system. Who does a particular system work for, how does it change us, and what does it make possible?

This last point is one that Matthew Fuller explores in his introduction to “Software Studies \ A Lexicon,” when he refers to software’s balance between potential and limit as “conditions of possibility.”25 Despite a common belief that network and computational systems are neutral actors, enabling human communication and creativity, these systems enact a series of constraints on those who use them,26 directing their actions, limiting their options, and overall, constructing them as users.27

In the case of Facebook, the company states that its mission is to “make the world more open and connected.”28 Given that software is Facebook’s primary asset—its deliverable product—we must examine both code and interface to understand the efficacy of this claim. Is Facebook’s interface design solely working to enable social connection, or does it work in other ways? Are the site’s data structures simply holding information in a convenient format, or does the design of those structures create other effects?

Though software studies is growing rapidly as a field, now with its own journal29 and book series,30 there have only been a handful of examinations of Facebook from a software studies perspective. Carolin Gerlitz and Anne Helmond looked at Facebook’s use of social “like” buttons across the web as both metrics and trackers within a larger “Like economy.”31 Robert Gehl used the concept of “reverse engineering” to elicit the political desires of those who built Facebook.32 Both Gehl and I have analyzed how Facebook’s reduction of users to data sets homogenizes identity and fuels Facebook’s success over competitors.33,34 Taina Bucher has explored how social networks like Facebook “program sociality” and enact “algorithmic power.”35,36 And within the related area of platform studies, various scholars have examined how Facebook as a platform embodies the concepts of life and death,37 facilitates “networked publics,”38 and combines private, public, and corporate interests into a new form of sociality.39 There have also been a few artistic projects that utilize or question metrics, such as those from Petra Cortright,40 Ian Bogost,41 Anthony Antonellis,42 and others.43 However, nothing to date has combined both a theoretical and a research-based art practice approach to examine the role of visible metrics across the Facebook interface. It is here that I hope to contribute by examining how Facebook metrics direct and prescribe user (inter)action, and by modifying the interface itself to remove the metrics as a way of both understanding and attenuating their effects.

Revealed Metrics in the Facebook Interface

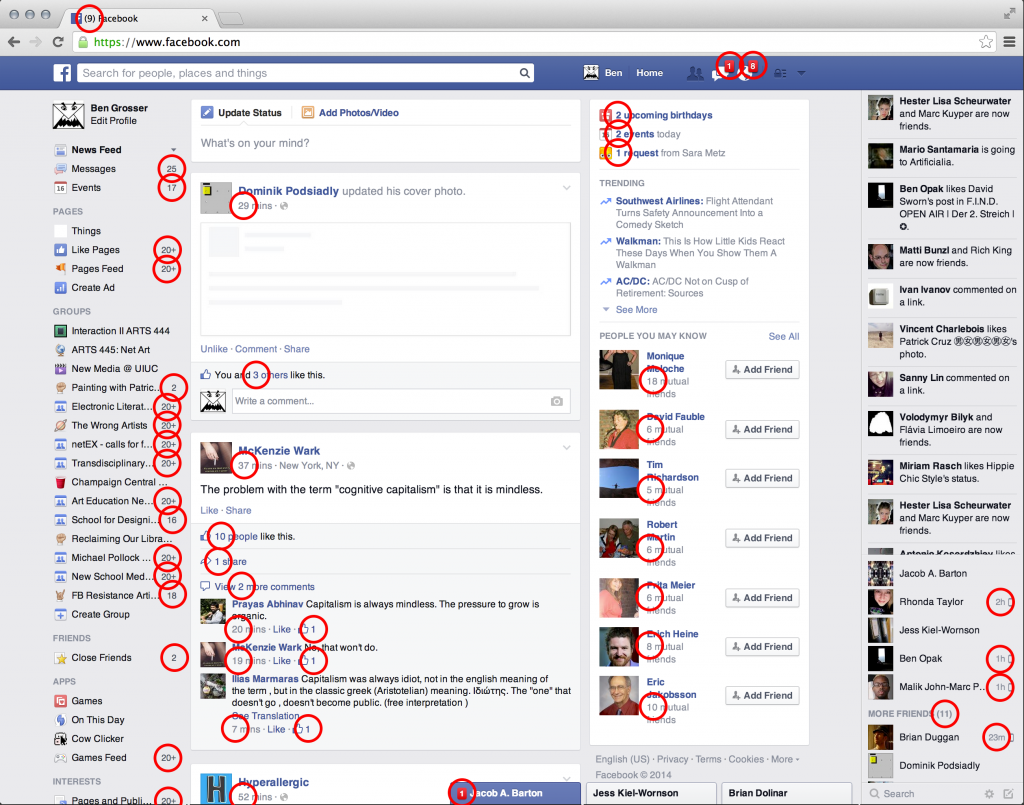

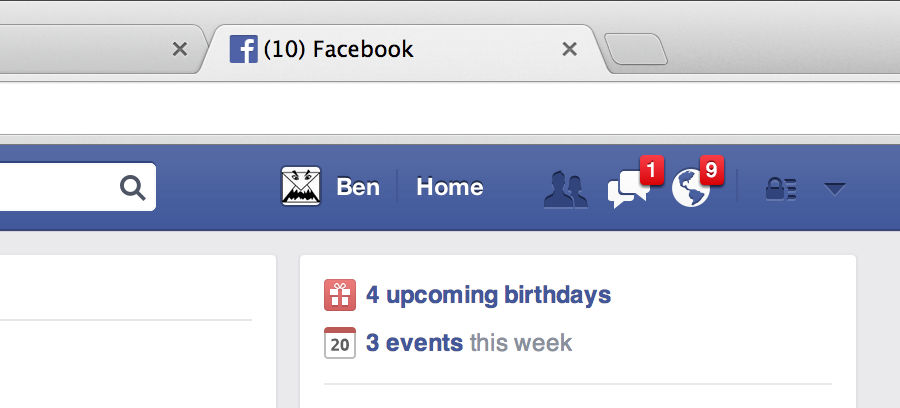

Within the context of Facebook, I define “metrics” as enumerations of data categories or groups that are easily obtained via typical database operations, and that represent a measurement of that data. These metrics are everywhere within the Facebook interface (fig. 1). They are the numbers counting “likes,” comments, shares, friends, mutual friends, pending notifications, events, friend requests, messages waiting, chats waiting, photos, places, and much more. Every page within the vast Facebook infrastructure contains at least one, and the most-used pages, such as the news feed and timeline, are littered with them.

These types of quantifications are fast becoming a staple of interface design across the Web. Other popular applications that have their own sets of metrics include the thread and message counts in Gmail, the follower, following, and tweet counts in Twitter, and the views, thumbs up, and thumbs down counts on YouTube.

However, Facebook is the largest site that depends so heavily on metrics that count social interaction. This is likely because, as a corporation, Facebook’s survival depends on its ability to sell targeted advertising, and those targets are built from the metrics it collects.44 Not all metrics collected by Facebook are visible in the interface, however. This raises the question of which specific metrics Facebook reveals to its users and which ones it keeps to itself. How do these hidden and revealed metrics differ, and what drives the company’s decisions to show or conceal data?

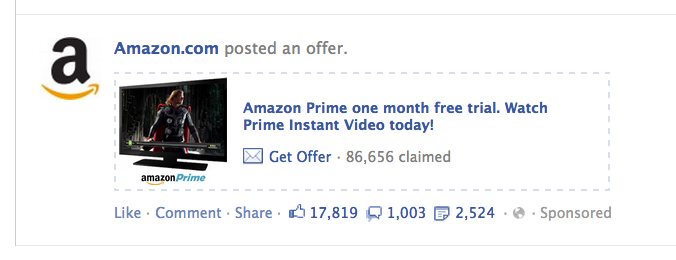

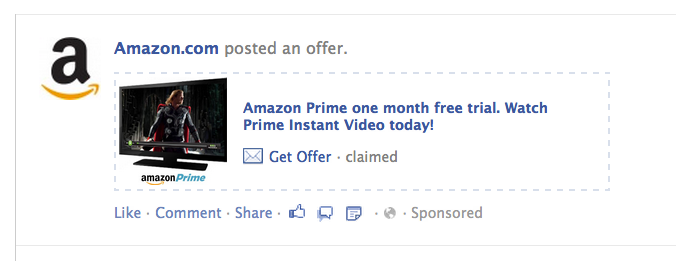

I would argue that Facebook’s primary criterion for making such decisions is whether a particular metric will increase or decrease user participation. Those who press “like” and increment their counters are “more engaged, active and connected than the average Facebook user.”45 As Gerlitz and Helmond found, the “like” button “seek[s] to set a chain of interaction in motion.”46 Engagement with a “like” represents not just a single action, but future potential engagement with a variety of content.47 In other words, interaction with the “like” leads to more (future) participation. Similarly, when looking across all metrics on Facebook, are users more likely to click on an advertisement if they only see its title or if that title is accompanied by a message indicating that 17,819 other people “liked” it before them (fig. 2)? I contend that Facebook shows the metric when the latter is true.

As I noted above, many metrics aren’t shown. For example, users aren’t told how many things they “like” per hour, how many ads they click each day, or how effective the “People You May Know” box is at getting them to add more friends to their network. These types of analytics are certainly a significant element within the system, guiding personalization algorithms, informing ad selection choices, etc. Would showing these types of metrics to the user make them more or less likely to participate? Similarly to the above, I contend that when the answer is less, Facebook hides the metric.

I would also speculate that a few of these decisions are not as well considered as one might expect. A relational database, such as the one used by Facebook, is built from lists48 of lists of data, which are computationally easy to enumerate. In other words, the data structure underlying Facebook lends itself to metrication. On occasion, this may lead the Facebook interface programmer to include a metric simply because they can.
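How little effort such an enumeration takes can be shown with a minimal sketch using Python's built-in `sqlite3` module. The `likes` table, its columns, the sample names, and the `like_metric` function are all hypothetical and chosen only for illustration; they do not describe Facebook's actual schema or code.

```python
import sqlite3

# Hypothetical schema for illustration only; not Facebook's actual database.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE likes (post_id INTEGER, user_name TEXT)")
conn.executemany(
    "INSERT INTO likes VALUES (?, ?)",
    [(1, "Ana"), (1, "Ben"), (1, "Chloe"), (2, "Ana")],
)


def like_metric(conn: sqlite3.Connection, post_id: int) -> str:
    """Collapse the list of people who liked a post into a single count."""
    (count,) = conn.execute(
        "SELECT COUNT(*) FROM likes WHERE post_id = ?", (post_id,)
    ).fetchone()
    noun = "person likes" if count == 1 else "people like"
    return f"{count} {noun} this"


print(like_metric(conn, 1))
```

A single `COUNT(*)` query is enough to replace a list of names with a number, which is consistent with the suggestion that some metrics may appear in an interface simply because they are cheap to produce.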

The Desire for More Meets the Metricated Social Self

So, if the database structure underlying Facebook lends itself to metrication, how does metrication affect our social sense of self? I have argued above that capitalist realism generates within us a desire for more. How does this play out in a system of social interaction that foregrounds these metrics?

When a user first joins Facebook, he or she starts with zero friends. At this point the site is what Robert Gehl refers to as a “dehumanized network,” a site that appears as a “lifeless shell” without user-generated content.49 The primary consequence of this is an empty news feed. Without any connections the feed is inactive, and there is nothing to see. When the user adds one new friend or “likes” one new Page, the feed comes to life—but only at a trickle. When another is added its output increases.50 From there, the more friends/Pages a user adds or likes, the more active the feed becomes. In other words, this feed, which is the primary spectacle of Facebook, is only usable and/or useful with a significant friend network driving it.51 Its design teaches the user that more is necessary.

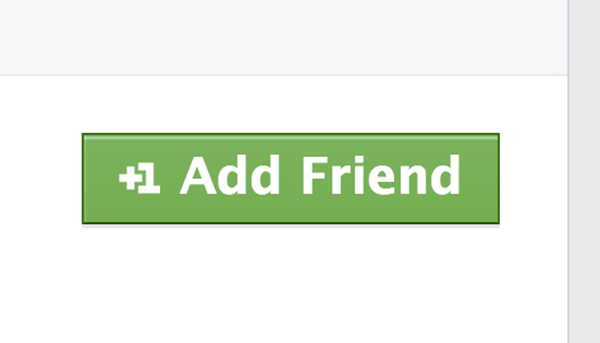

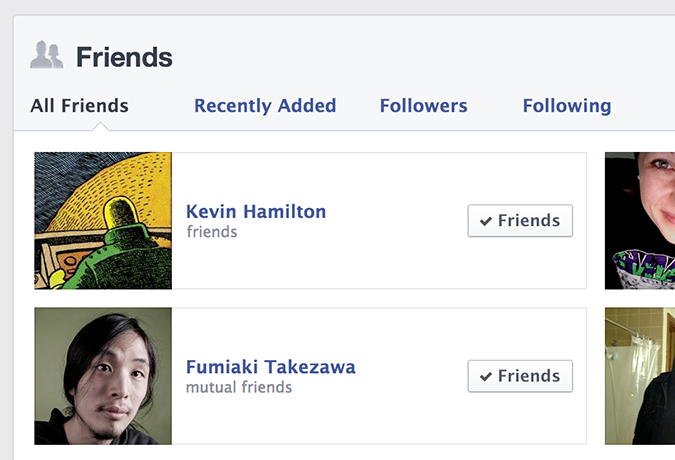

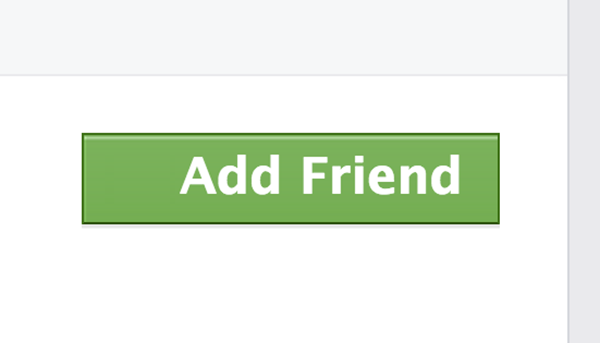

When users examine their profiles, they find that the system shows them how many friends they have (fig. 3). At the same time, every visit to someone else’s profile reveals how many friends that person has. These publicly viewable friend metrics play into our desire for more. When we are constantly being told how many friends we have, we are encouraged to add another, to make that number go higher, to exceed our current metrics. This is further reinforced by the interface, which places a “+1” in front of the “Add Friend” button (fig. 4). The system makes it appear as if adding a friend increments one’s social capital by one.

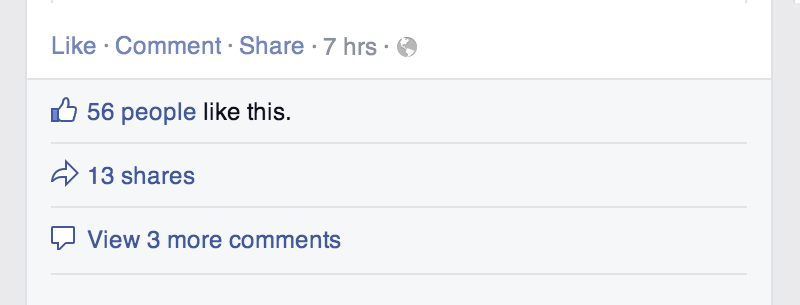

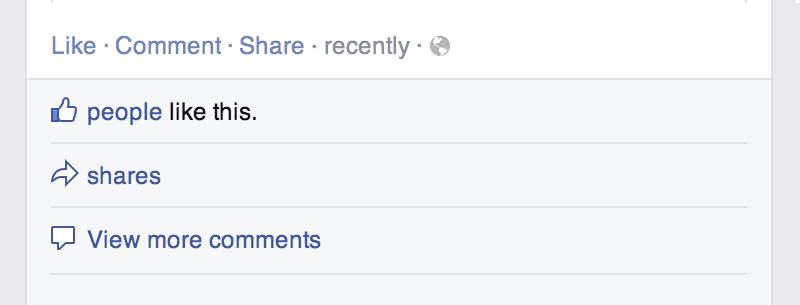

While the news feed teaches users to keep adding friends, the “like” button most heavily activates the desire for more. Clicking “like” is the central expression of agency on Facebook, the site’s signature feature and its most visible symbol. Facebook users “like” a friend’s “status,” “like” a “Page” for their favorite band, or “like” an ad for their local pizza place. The site tallies these “likes,” aggregates the “likers,” and foregrounds the resulting quantification. This happens everywhere: underneath every post on the news feed, beside every photo, and under every ad in the right-hand column (fig. 5).

The ever-increasing list of friends leads the “like” to function as an instrument of social capital. A larger network of connections enables a larger audience for “likes,” and the “likes” themselves serve as indicators of connection. More “likes” suggest more popularity. Fewer “likes” suggest something that didn’t connect, that didn’t warrant this essential action.

The “like” also functions as a form of symbolic capital, as a unit of trade in the recognition and prestige within one’s social group.52 This is because Facebook is becoming a new space of social visibility at a time when our ability to display more traditional markers of success (cars, houses, etc.) is under attack by everything from health care costs to public sector defunding. Facebook appears to be a more equalizing space, a place where an accumulation of likes becomes an “ideal weapon in [the] strategies of distinction,”53 a method of separation that distinguishes the popular from the unpopular. Specifically, this accumulation comes in the form of likes received and likes given. Likes given demonstrate our taste and culture to others, while likes received suggest that our statements and collections are worthy of recognition. When this need for esteem intersects with the desire for more, the accumulation of social and symbolic capital becomes the primary objective of the metricated social self.

The Auditing of Social Relations and the Graphopticon

Over the last few decades, we have become increasingly subject to mechanisms of measurement, a constant push to assess performance from a quantitative perspective. This culture of audit54 begins—most obviously, at least—within the public school system, where standardized tests are used to evaluate teaching effectiveness, to measure student performance, and ostensibly, to provide data useful for improving the system. These audits continue through college, and the practice eventually “leaks out and begins to organize all walks of life.”55 Others have explored the ways in which this audit culture not only fails to improve what it purports to care about, but also how it erects a neo-liberal political technology that results in a form of panoptic self-surveillance, an internalized need to excel within the audit’s parameters.56

Within the context of the metricated social self on Facebook, however, this culture of audit plays out a bit differently. It is no longer a form of panoptic self-surveillance where the few watch the many, as in Bentham’s prison design,57 or where the many watch the few, as in Mathiesen’s synopticon.58,59 Instead, today’s social network-based culture of audit is more similar to what Nathan Jurgenson refers to as “the many watching the many,”60 an “omniopticon” that arose with the emergence of “Web 2.0”61 technologies and in which everyone watches everyone else. When this form of “social surveillance”62 combines with audit culture in a metricated Web 2.0 environment, it creates a new mode of surveillance I call the graphopticon.

The graphopticon is a form of self-induced audit within social networks like Facebook where the many watch the metrics of the many, where the social graph combines with the omniopticon to create the potential of being audited all the time. The social graph is the database mechanism used by Facebook that stores and structures digitally mediated relationships between the site’s users,63 thus enabling those within the graph to watch everyone else within the graph. However, the graph isn’t simply facilitating social surveillance, because everyone’s view of everyone else is always accompanied by each user’s social metrics. Given the relationships between these metrics and the prestige, esteem, and various forms of capital I described earlier, this graphoptic potential manifests as an internalized need to excel in metric terms, that is, in whatever areas are easily seen and, most importantly, measured by others (e.g. “likes,” friends, and all other metric presentations of self within Facebook). While one Facebook user can’t know if their friend count is being observed at any one moment, they know that any number of users could be looking at any moment. Their Timeline is open to all of their friends, and in many cases to all of the world. Therefore, users need to maintain a high-performing metric presentation at all times, complete with as many friends as they can make, or as many likes as they can accrue, if they are to appear to be doing well whenever they might be observed.

This need for high metric performance is further influenced by the workings of Facebook’s News Feed algorithm.64 The more a user interacts with likes, comments, and other similar objects throughout the site, the more the algorithm favors that user’s posts and thus shows them on the news feeds of their friends.65 More interaction leads to more visibility, while less interaction can leave a user unseen by others. As Bucher argues, this “algorithmic power” results in a reversal of the Panopticon, where a threat of constant visibility is changed into a threat of constant invisibility.66 This panoptic reversal thus strengthens the graphopticon, as visibility is a prerequisite for obtaining the likes, shares, and friends necessary for high metric performance.

The database as a mechanism of observation is particularly suited to facilitate and promote the graphopticon. As Fuller and Goffey note, “the very ease with which one can implement an audit trail within a database—and, with minimal technological extension, in the mundane software-based tools of everyday life, in social networks, for example—fuels the proliferation of audit and establishes the conditions for its extension to more and more kinds of social relation.”67 Facebook’s foregrounded metrication of social self is such an extension (as is its inward, unrevealed metrication), subjecting its users to a continuous, virtual, and audited “keeping up with the Joneses” 68,69 in a unique mixture of metric consumption and symbolic capital. Thus users understand their online lives within a market narrative. The database enables their management and monitoring of that narrative, while the “audit favors as virtuous those who assimilate themselves to its requirements.”70 Within Facebook, the audit’s primary requirement is more.

Metricated Time and a Preference for the New

Before analyzing the broad effects of Facebook metrics, I want to examine one final metric: the timestamp. Timestamps are the hyperspecific, time-relative age counts that appear underneath every item on the news feed and on a number of other items across the site (photos, timeline entries, notes, etc.). A simple notation of time, in and of itself, wouldn’t qualify as a metric at all within my definition. Facebook timestamps aren’t simply notations of time—they are constantly updating enumerations of age in relation to the present.

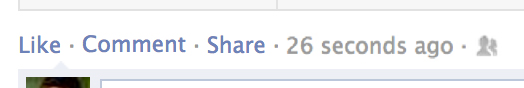

Timestamp presentations adhere to distinct language patterns. At their most specific, they mark seconds (e.g. “26 seconds ago”) (fig. 6), and then move to minutes (e.g. “1 minute ago”, or “3 minutes ago”). Older timestamps shift into more general verbal descriptions (e.g. “about an hour ago”), until finally marking hours, and eventually days. By relentlessly reminding us of the age of a post relative to the present, timestamps create a false sense of urgency. I really don’t need to know that my friend’s meme post went live “23 seconds ago” rather than “49 seconds ago”, or that my colleague ate her banana “23 minutes ago” rather than “30 minutes ago”. But these constant enumerations of age present the news feed as a running conversation that you can’t miss—if you leave for even a second, something important might pass you by.

Timestamps also reveal both an engineered and an ideological preference for the new. This preference is engineered in the sense that Facebook has crafted an interface mechanism that foregrounds age metrics as a way of promoting the new over the old. As a result, scrolling a typical news feed to see posts that are even just one day old (let alone two or three) can take quite a while. Further, because of the way Facebook designed that feed, the more one explores the old, the slower it gets.71 This discourages exploration of the past while encouraging continuous consumption of the present. The preference is ideological in the sense that this mechanism applies to everything: messages, news, profiles, notes, photos, music, events, etc., appear in reverse chronological order. In other words, a focus on the present is built into everything within Facebook.

The composition of timestamp language directly illustrates Facebook’s predilection for the present. As I discussed earlier, timestamps increasingly shift from specific to general the older they get. For example, instead of reporting an item’s post time as “1 hour 20 minutes ago,” Facebook reports “about an hour ago.” “3 hours, 39 minutes, 18 seconds ago” becomes “3 hours ago.” This shift is algorithmic, a presentation of age through code that intentionally deviates from times stored in the database. The older a post gets, the vaguer its timestamp becomes. Facebook isn’t storing time as “about an hour ago,” so why hide the specific while presenting the general? Generalized age language suggests older items don’t warrant specificity. The periodicity of timestamp updates also reveals Facebook’s intentions with time. “8 hours ago” requires an interface update only once per hour instead of the once per second required of items posted within the last minute. The slower a timestamp updates, the less attention it draws to itself and the fewer resources it appears to utilize, while newer items change frequently and draw more attention as a result. Timestamp language represents an engineering of ideology, an algorithmic realization of a preference for the new.
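The shift from specific to general can be made concrete with a short sketch. The Python below is a guess at the kind of logic involved, not Facebook's code: the function names and the exact thresholds (60 seconds, one hour, two hours, one day) are assumptions chosen so that the output matches the examples given in the text, and the once-per-minute refresh for items under an hour old is an inference rather than something the text states.

```python
def _count(n: int, unit: str) -> str:
    """Format a count with a singular or plural unit."""
    return f"{n} {unit}{'' if n == 1 else 's'} ago"


def relative_timestamp(age_seconds: int) -> str:
    """Describe a post's age, growing vaguer as the post gets older."""
    if age_seconds < 60:
        return _count(age_seconds, "second")
    if age_seconds < 3600:
        return _count(age_seconds // 60, "minute")
    hours = age_seconds // 3600
    if hours == 1:
        # The stored time is exact; the label deliberately is not.
        return "about an hour ago"
    if hours < 24:
        return _count(hours, "hour")
    return _count(hours // 24, "day")


def update_interval(age_seconds: int) -> int:
    """How often, in seconds, the label must refresh (assumed cadence)."""
    if age_seconds < 60:
        return 1
    if age_seconds < 3600:
        return 60
    if age_seconds < 86400:
        return 3600
    return 86400


for age in (26, 180, 4800, 13158):
    print(relative_timestamp(age), "| refresh every", update_interval(age), "s")
```

Under these assumptions, a post that is 1 hour 20 minutes old (4,800 seconds) is labeled "about an hour ago," and one that is 3 hours, 39 minutes, 18 seconds old (13,158 seconds) is labeled "3 hours ago," while the refresh interval lengthens from one second to one hour as the post ages.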

Douglas Rushkoff refers to this ideology as presentism, a preference for the now at the expense of the future.72 In the face of this preference, we “end up reacting to the ever-present assault of simultaneous impulses and commands.”73 Robert Gehl sees Facebook’s encouragement of users to “focus on the new and the immediate” as a new mode of computing called “affective processing.”74 Both presentism and affective processing configure us into a reactionary mode and, when combined with the desire for more, compel us to focus on the news feed. The user watches and waits for the next thing to appear, and this encourages active production of contributions to the conversation. Given that Facebook’s value is entirely dependent on how much its users participate and contribute to its databases, this false sense of urgency has high utility for the system. Without user content there would be no Facebook to monetize.

How Metrics Guide User Behavior, or What Do Metrics Want?

Theodore Porter, in his study of quantification titled Trust in Numbers, calls quantification a “technology of distance”75 that “minimizes the need for intimate knowledge and personal trust.”76 Enumeration is impersonal,77 suggests objectivity, and in the case of social quantification, abstracts individuality.78 As previously demonstrated, the world’s foremost social network, a system that binds one billion of us together within networked space, is highly dependent on quantity. How does this technology of distance, a structure designed for impersonal communication, affect social interaction within Facebook? What are the motivations behind these effects, both by the designers of the system and by the system itself? How are metrics guiding user behavior?

To answer these questions I want to start by thinking about how Facebook numbers act. Alfred Gell’s theories of the agency of artworks can be useful here. He sees artworks “as the outcome, and/or the instrument of, social agency.”79 Agency is a “culturally-prescribed framework for thinking about causation, when what happens is (in some vague sense) supposed to be intended in advance by some person-agent or thing-agent.”80 Similarly, it is useful to think about Facebook metrics as having built-in intentions. The “like,” for example, can be seen as an assemblage of the intentions of the user who liked something, the programmers that enabled that like to occur, the future engagement that “like” might produce, and the system that facilitates the quantification of likes in the first place.81 In other words, Facebook quantifications have social agency. Given this, what do these metrics want?

The primary thing that Facebook metrics want is increased user engagement with the site. In the case of Facebook the corporation, this desire is not surprising. The value of Facebook is dependent on the breadth of its data, and more—more users, more “likes,” more photos, more friends, etc.—is the key to increasing this value. Facebook metrics act to increase user engagement.

But what do these metrics want for themselves, if this is even possible? Materialist theory questions the premise that humans hold exclusivity on agency. Instead, we live “within a natural environment whose material forces themselves manifest certain agentic capacities,” where “materiality is always more than ‘mere’ matter,” forcing us to reconsider “the location and nature of capacities for agency.” 82 Jane Bennett refers to this material as “vibrant matter,” or “the capacity of things—edibles, commodities, storms, metals—… to act as quasi agents or forces with trajectories, propensities, or tendencies of their own.”83 Bruno Latour’s Actor-Network Theory theorizes both human and non-human entities as “actors,” where “any thing that does modify a state of affairs” has agency.84

So within this framing of non-human agency, what does a metric want? What are a metric’s needs, or its tendencies? I would argue that metrics are no more immune to the desire for more than we are: as an instrument of capital, the metric is just as susceptible to capital’s growth fetish. Metrics want to “pile up,”85 to be larger, to grow higher. Within Facebook, the best way for them to do this is to encourage more use of the site by Facebook’s users.

Facebook metrics employ four primary strategies to effect an increase in user engagement: competition, emotional manipulation, reaction, and homogenization.

I discussed the roots of competition earlier: namely the growth fetish of capitalist realism and the way it drives us to get more “likes” or friends than our peers.

Metrics also manipulate Facebook’s users emotionally. For example, the graphopticon wants us to watch each other, to induce a need to excel quantitatively in the face of our friends. At the same time the metrics also create a more general state of anxiety, as we wait for more “likes,” as we look for more quantitative evidence of acknowledgement from others, and as everything gets old right before our eyes. We are left with a need to escape that anxiety,86 and the easy way out is the metric more.

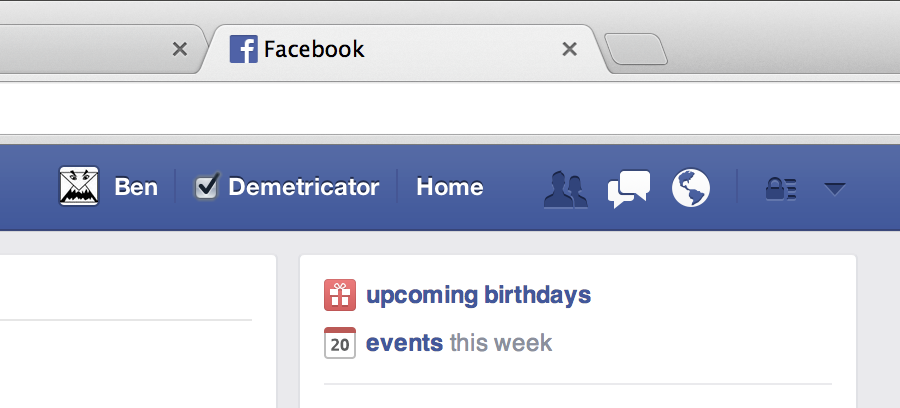

Mark Fisher paraphrases Spinoza to argue that, “far from being an aberrant condition, addiction is the standard state for human beings, who are habitually enslaved into reactive and repetitive behaviors.”87 Metrics play on this habit by developing a state of reactive tendencies in Facebook users. A primary focal point for these tendencies is the block of red and white notification metrics in the site’s main navigation bar (fig. 7). These numbers enumerate new friend requests, messages, “liked” posts, and other similar actions. Upon login, users check their notification metrics to see how much attention they received while they were away. During a session, users repeatedly examine these icons, looking for reactions to the content they’ve posted. In both cases, the presence of a red and white number suggests metric success.

The design of these notification metrics sets up an endless interaction loop that draws on the user’s desire for more to compel the consistent generation of content for Facebook. Users see the notification and click the number to read it. That click causes the metric to vanish. The quickest way to get new notifications is to generate additional content that attracts attention. “Liking” a post, adding a friend, or leaving a comment all create a potential for new metric rewards. The more content a user generates, the higher the potential.

This interaction loop of checking, reading, and generating resembles compulsive behavior in the way that it sets up a cycle that can never be satisfied. The user’s desire for more craves higher notification metrics, but the system’s design clears those metrics every time the user checks them. In the case of notifications, metrics want more attention from the site’s users.

Finally, metrics homogenize Facebook’s users.88 Quantification, as a technology of distance, drives us toward enumeration as the primary quality distinguishing users from one another (and thus distracting users away from more individualistic markers). Quantification does this through aggregation. Individual thoughts and ideas are aggregated into a single number. Dissimilar things are made equivalent.89 Whole collections of friends are reduced into one or a few values.90 Thousands, or even millions of individuals get lumped together because they “liked” the same product. “Like” metrics themselves combine disparate user actions and intentions into a single value.91 In these ways, the metric becomes a simulacrum, in the Baudrillardian sense,92 replacing the human, their emotions, and their events. These aggregations homogenize identity and limit the way one’s individuality can be represented within the system. With any system that reduces difference, it is important to ask whether the less privileged become most subsumed by quantification, be they women, minorities, LGBT people, or the poor.93 This homogenization likely privileges the majority while it also serves Facebook’s algorithms for ad targeting by constructing deceptively simple profiles of user interest.94 In this case the metrics want less individualized distinctions in order to facilitate more aggregation.

Through these strategies, metrics construct Facebook’s users as homogenized records in a database, as deceptively similar individuals that engage in making numbers go higher, as users that are emotionally manipulated into certain behaviors, and, perhaps more importantly, as subjects that develop reactive and compulsive behaviors in response to these conditions. In the process, these metrics start to prescribe certain kinds of social interactions.

The ways that Facebook metrics prescribe interaction are most easily understood through examples.

When users are constantly told how many friends they have, and how many friends their friends have, how does that change their desire to add friends? Most are encouraged to add more friends, but even those who actively and/or periodically trim their friend list are still working in reaction to friend metrication. The metric drives their actions.

When users are shown how many “likes” their status gets, how does their reaction start to guide what they write in the first place? Certain kinds of posts get more likes than others, and the desire for more thus begins to guide what users write and submit. In other words, when users want more likes, they write statuses that get them and post photos that accrue them. Quantification is changing the quality of what users do.

When users are writing posts to get more “likes,” how does this change the number of things they post? More posts have the potential for more likes, so the metrics drive users to share more than they would have otherwise, to create more opportunities for metric excellence.

When users see everyone else’s “likes,” how does this knowledge affect their propensity to give a like? The metrics interact with our need for reciprocal altruism here, often driving users to like others’ posts in the hopes that the others will like theirs in return.

These examples illustrate my argument that metrics employ strategies to push us into “pre-existing flows”95—wanting more, “liking” more, and sharing more—all in the service of increased user engagement. And through its quantifications of social interaction, Facebook thus becomes a technology of control that pushes for continuous consumption within its system of metrics. More is the thing that metrics want.

Facebook Demetricator and the Easing of Prescribed Sociality

So, if Facebook, through its metrics, prescribes certain kinds of social interactions, what would a social network without quantifications be like? How would the absence of metrics change our interactions within networked space? As an artist, one of the ways I ask questions like these is to intervene within a system in order to examine how that system changes who we are and what we do.96 Toward that end, I created a work I call Facebook Demetricator.

Facebook Demetricator (Demetricator) is a free and open source web browser extension that removes all metrics from the Facebook interface.97 Friend counts disappear. “Like” quantifications vanish. Shares are no longer enumerated.

For example, if the text under someone’s status says “You and 19 other people like this,” Demetricator will change it to “You and other people like this” (fig. 8). Under an ad, “23,413 people like this” becomes “people like this” (fig. 9). The “+1” on an “Add Friend” button vanishes. “8 mutual friends” becomes “mutual friends” (fig. 10). The user can still click on a link and count up their mutual friends (or likes, or comments, etc.) if they care about understanding them as an aggregate value, but under the influence of Demetricator that foregrounded quantification is no longer visible. These removals happen everywhere, including the news feed, the profile, the events page, in notification icons, and within pop-ups. Users can toggle the demetrication using a prominent control in the menu bar (fig. 11), turning it on or off when desired, but its default state is on (metrics hidden).
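The label rewrites described above can be sketched in a few lines. This is an illustration only, written in Python for readability; it is not Demetricator's code, and the function name `demetricate` and the regular expression are assumptions made for this example. It assumes the label has already been identified as a metric, and it simply drops the count while keeping the surrounding words.

```python
import re

# Hypothetical pattern for a displayed count such as "19", "23,413", or "+1",
# together with any whitespace that follows it.
_COUNT = re.compile(r"\+?\d[\d,]*\s*")


def demetricate(label: str) -> str:
    """Remove the numeric count from a metric label, keeping the words."""
    without_count = _COUNT.sub("", label)
    # Collapse any doubled spaces left behind by the removal.
    return " ".join(without_count.split())


if __name__ == "__main__":
    for label in ("You and 19 other people like this",
                  "23,413 people like this",
                  "8 mutual friends",
                  "+1"):
        print(repr(label), "->", repr(demetricate(label)))
```

Applied to the examples in the text, the sketch turns “8 mutual friends” into “mutual friends” and reduces a bare “+1” to an empty label.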

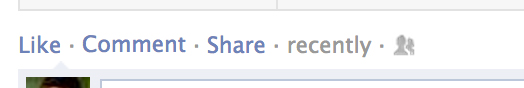

Even the continuous enumerations of age, via timestamps, are modified (fig. 12). However, rather than hiding them, Demetricator recontextualizes them. As mentioned earlier, users really don’t need the precision that timestamps broadcast, whether something happened “12 minutes ago” or “13 minutes ago”, for example. So, in response, Demetricator converts all timestamps into one of two categories. Those that enumerate an age within the last two days become “recently”. Any timestamp older than that becomes “a while ago”. These two categories cover all time scales.
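The two-category conversion can be expressed as a single comparison. The following is a minimal sketch rather than the extension's implementation: the name `demetricate_age`, the use of a `timedelta` as input, and the choice to treat an age of exactly two days as “recently” are all assumptions for illustration.

```python
from datetime import timedelta

# Assumed cutoff: ages up to and including two days count as recent.
RECENT_WINDOW = timedelta(days=2)


def demetricate_age(age: timedelta) -> str:
    """Map a post's age onto one of the two categories described in the text."""
    return "recently" if age <= RECENT_WINDOW else "a while ago"


if __name__ == "__main__":
    for age in (timedelta(minutes=12), timedelta(minutes=13), timedelta(days=3)):
        print(age, "->", demetricate_age(age))
```

Under this sketch, “12 minutes ago” and “13 minutes ago” collapse into the same label, which is the loss of precision the work intends.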

With Demetricator, the focus is no longer on how many “likes” a user received, how much someone likes their status, or on how old a post is, but on who liked it and what they said. Quantity is no longer foregrounded, leaving users to focus on the content that remains. Adding a friend is no longer labeled as “+1” (fig. 13). Users must decide for themselves whether a comment, photo, or advertisement is of interest without knowing how many others liked it first. Through its changes to the interface, Demetricator invites Facebook’s users to try the system without the numbers, to see how the absence of metrics changes their experience.

To make this possible, the Demetricator software runs within the web browser, constantly watching Facebook when it is loaded and removing the metrics wherever they occur. This is true not only of those counts that show up on the user’s first visit, but also of anything that gets dynamically inserted into the interface over time. The demetrication is not a brute-force removal of all numbers within the site, but is instead a targeted operation that focuses on only those places where Facebook has chosen to reveal a count from their database.98 Thus, numbers a user writes into their status, start/end time listings for an event, and other numbers not functioning as metrics are left untouched.
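The difference between a targeted operation and a brute-force one can be made concrete with a small model. The sketch below is hypothetical throughout: the `Node` and `Page` classes, the `kind` labels, and the set `METRIC_KINDS` are invented for illustration and do not describe Facebook's markup or Demetricator's code. It models the interface as a stream of labeled pieces of text and strips counts only from those labeled as metrics, whether they arrive on first load or are inserted later.

```python
import re
from dataclasses import dataclass

# Hypothetical labels for the places where the interface reveals a count.
METRIC_KINDS = {"like_count", "comment_count", "mutual_friends"}

# Hypothetical pattern for a displayed count such as "8" or "23,413".
_COUNT = re.compile(r"\+?\d[\d,]*\s*")


@dataclass
class Node:
    kind: str  # what this piece of interface text represents
    text: str  # the text shown to the user


def demetricate_node(node: Node) -> Node:
    """Strip the count from metric nodes; leave every other node untouched."""
    if node.kind not in METRIC_KINDS:
        return node
    cleaned = " ".join(_COUNT.sub("", node.text).split())
    return Node(node.kind, cleaned)


class Page:
    """Toy stand-in for an interface that keeps receiving new content."""

    def __init__(self) -> None:
        self.nodes: list[Node] = []

    def insert(self, node: Node) -> None:
        # Every insertion passes through the same filter, so content added
        # after the first load is handled like content present at the start.
        self.nodes.append(demetricate_node(node))


if __name__ == "__main__":
    page = Page()
    page.insert(Node("like_count", "8 people like this"))
    page.insert(Node("status_text", "I ran 5 miles today"))
    page.insert(Node("event_time", "Starts at 7:00 pm"))
    for node in page.nodes:
        print(node.kind, "->", node.text)
```

In this model the number a user typed into a status and the start time of an event pass through unchanged, because neither is labeled as a metric.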

Demetricator has been in use by thousands worldwide since it was released in October of 2012. The project accrued significant press and blog attention,99 and as a result, a plethora of online comments. In addition, a large number of users of the software have written me personal emails sharing feedback about their use of the project. These comments are instructive for understanding how users navigate the metricated social space of Facebook.

Some who commented on web articles questioned the usefulness of Demetricator. One commenter on The Verge writes: “But… that’s the whole reason for Facebook. To ring up the Experience Points.”100 Another on Gizmodo wrote: “It’s useful to strive to maximise [sic] numerical success. It’s how SATs and many careers work.”101 On her own blog, Jena Fanelli wrote in a post titled “Sucking the fun out of Facebook,” “My thoughts are that this just takes away the point of Facebook while limiting stalking….thoughts?”102

The majority of feedback I’ve received, however, has been direct email communication illustrating how users have embraced Demetricator. On the project’s website I posed questions to users about the ways Demetricator has changed their experience of Facebook.103 Here is a sample of responses:

“[Facebook is] so much more enjoyable without constant (subconscious) pressure to compare when numbers are involved.”104

“Now it doesn’t matter to me how many likes something has. My opinion is based on the quality of the post and its discussion. It’s not a competition! I enjoy it so much more.”105

“It’s now to the point where our emotional well-being is partly determined by the numbers we get on Facebook.”106

“Facebook … has become a massive number glut, so much so that the notifications become like meth, you just can’t stop checking and rechecking. It simply became a nervous addiction for me, inadvertently. But, that all changed when I downloaded the add-on. No more ridiculous liking stats, no more mounds of notifications or the number of comments on other’s statuses. It added a Zen element to the entire format, and I finally feel at ease. It takes out that crucial element that makes Facebook addictive to myself and so many other people.”107

“My flocking to [Facebook’s] little red notification number is [akin] to a mouse pressing a lever for heroin.”108

These users are having a different experience with Facebook when the numbers are absent. As a result, they start asking questions about how the metrics are changing things. Several commenters even address some of the strategies I described above that metrics employ in their drive for more, such as reaction (referred to by users as addiction), competition, etc. However, as one might expect, many people want the numbers. One prominent blogger wrote me, in response to the way Demetricator removes metrics: “Yeah, but those of us with good metrics want to show them. :-)” Just as metrics want more, most want more metrics.

Perhaps the most interesting feedback I’ve received reveals the self-imposed rules users have crafted around Facebook’s quantifications of social interaction. On the topic of “likes” and comments, one user wrote me about how, with Demetricator installed, he was no longer able to like anything. “I found myself not [wanting] to comment on things as often because I wouldn’t know if it had a lot of comments or likes already.”109 This is because he didn’t want to be one of the only people to like something that turned out to be unpopular. Another user told me that now she doesn’t know what to like because, if something already has, say, twenty-five likes, then surely that person doesn’t need any more likes from her. They have enough already!

Users who create self-imposed rules around “like” behavior appear to be developing strategies in an attempt to manage the effects of “like” metrics. Their rules are limiting structures that try to minimize the extension of social risk or the future loss of social capital. The first user described above tries to limit his social risk by withholding his likes from posts that might turn out to be unpopular. The second user seems to believe attention within Facebook is finite, that too many likes given to another user leaves too little for the rest—including herself. In other words, she foresees a potential shortage of symbolic capital and adjusts her liking behavior accordingly. For both users, occasional inaction emerges as their method of coping with metrics that want more.

“Like”-limiting rules also suggest attempts to resist homogenization and automation. If a user gives a like to everyone and everything without discrimination, then that user’s interests or tastes are undistinguished. Such indiscriminate liking also suggests an automated user, a participant whose agency is driven by opportunity rather than choice. Waiting to like something until a post is proven popular doesn’t distinguish users, but rather further homogenizes them. Withholding likes from very popular posts limits users’ options to “like” posts below some threshold. For these users, the metrics are still guiding their behavior.

Timestamp demetrication is the software feature I’ve received the most requests to make optional or remove. Many users no longer knew if they could comment on or “like” something without the timestamps because they had created rules for themselves about how new something had to be if they were going to contribute their like. One user mentioned a two-day rule (no likes on posts older than two days); others didn’t specify a threshold. Some said they became passive without the timestamps, that without them they stopped commenting and liking altogether. The unease was high enough that one user hacked the code to undemetricate the timestamps, and sent me the change as a proposed fix.

This unease with timestamp demetrication illustrates that users understand the value of their “like” (the primary form of capital in Facebook) within temporal terms. They believe that newer likes are worth more while older likes are worth less. User estimation of the value of an older like is so low that, without a clear, undemetricated view of time, some users stopped interacting altogether.

However, the idea that users have a clear picture of time, without demetrication, is an illusion. While Demetricator certainly obfuscates timestamps, Facebook does the same thing—just with more differentiated categories of time. As I described earlier, when timestamps get older, they get less specific, increasingly hiding their precise placement within the timeline of posts. This obfuscation serves the needs of Facebook’s News Feed algorithm, the undisclosed formula that the corporation uses to decide what appears where and when within the news feed. As a post gets older, generalized timestamp language disguises any temporal rearrangement the algorithm performs. Generalized timestamp language also serves to hide post removals by the News Feed algorithm, as temporal gaps in the timeline become less obvious. Yet, the timestamp rules revealed by Demetricator show that, while users may not have a sophisticated understanding of the value placed on time by Facebook, they know enough to modulate their understanding of a “like’s” value in relation to the present. Facebook’s timestamp metrics want users to stay engaged with the system by spending their likes on posts that are new, and by doing so now.

In many of these cases above—from those unburdened of reactive tendencies to the users no longer feeling competitive with their friends—Demetricator has released its users from the interaction patterns that Facebook metrics impose. But perhaps more importantly, Demetricator has also served to reveal the existence of those patterns in the first place. By removing the numbers, users start to see what metrics want.

Conclusion

Facebook has become a primary space of interaction, but it is a private, mediated space, not the public town square of old—or even the relatively free spaces of Usenet or online forums. Demetricator intervenes into this new private social space in order to help us understand that Facebook is not a neutral facilitator of interaction. Demetricator reveals how Facebook draws on our deeply ingrained “desire for more,” compelling us to reimagine friendship as a quantitative space, and pushing us to watch the metric as our guide. But the metric is an agent of the system, a thing with intention that adheres to various powers, be they designers, programmers, Facebook the corporation, or the system itself. It places us within a graphopticon, asking us to evaluate the metrics of our friends while at the same time internalizing our need to excel quantitatively. The metric draws us in by focusing us on the now so that we stay active within the system, producing the content it needs to survive. It homogenizes our individuality, making us easier to categorize and market to while limiting our ability to distinguish ourselves. The metric wants what the system needs: more friends, more “likes,” more comments, more photos, more connections, and more points of analysis. Through its metrics, Facebook imposes patterns of interaction on us, changing what we say to each other and guiding how we think about each other. Demetricator, through its removal of the metrics, both reveals and eases these prescribed patterns of sociality. It shows us what the metrics want. The metrics want more.

Acknowledgements

I wish to thank a number of individuals in relation to this project. Kevin Hamilton, Laurie Hogin, Ryan Griffis, Kate McDowell, two anonymous reviewers, and Olga Goriunova all provided invaluable feedback on drafts of this text; without their help this article would not be published. Matthew Fuller’s interview of me about Demetricator as well as my presentation at the Institute of Network Cultures’ Unlike Us conference were crucial for my development of the ideas in this text. Christiane Paul lent early support and curated Demetricator into her exhibit “The Public Private” at Parsons in New York. Finally, this project was partially funded by a Terminal Award; I appreciate Terminal and Barry Jones’ financial support.

Bibliography

Adam, Alison. “Lists.” In Software Studies \ A Lexicon, edited by Matthew Fuller, 174-178. Cambridge, MA: MIT Press, 2008.

Antonellis, Anthony. “Facebook Bliss.” net art, 2012. http://www.anthonyantonellis.com/bliss

Baudrillard, Jean. Simulacra and Simulation. Ann Arbor, MI: University of Michigan Press, 1994.

Bauman, Zygmunt. Liquid Modernity. Cambridge, UK: Polity Press, 2000.

Bennett, Jane. Vibrant Matter: A Political Ecology of Things. Durham, NC: Duke University Press, 2010.

Bentham, Jeremy. The Panopticon Writings, edited by Miran Bozovic, 29-95. London: Verso, 1995.

Bogost, Ian. “Cow Clicker.” Facebook Application, 2010. http://www.bogost.com/blog/cow_clicker_1.shtml

Bourdieu, Pierre. Distinction: A Social Critique of the Judgment of Taste. Cambridge, MA: Harvard University Press, 1984.

Bourdieu, Pierre. Language and Symbolic Power. Cambridge, MA: Harvard University Press, 1991.

Bucher, Taina. “Programmed sociality: A software studies perspective on social networking sites.” PhD diss., University of Oslo, 2012.

Bucher, Taina. “Want to be on the top? Algorithmic power and the threat of invisibility on Facebook.” New Media & Society 14, no. 7 (2012): 1164-80.

Campanelli, Vito. Web Aesthetics: How Digital Media Affect Culture And Society. Rotterdam: NAi, 2010.

Coole, Diana and Samantha Frost. Introduction to New Materialisms: Ontology, Agency, and Politics, ed. Coole and Frost, 2-43. Durham, NC: Duke University Press, 2010.

Cortright, Petra. “Video Catalog.” net art, 2007-present. http://www.petracortright.com/gallery/.

Crosby, Alfred W. The Measure of Reality: Quantification in Western Europe, 1250-1600. New York, NY: Cambridge University Press, 1996.

Dean, Jodi. “Communicative Capitalism: Circulation and the Foreclosure of Politics.” Cultural Politics 1, no. 1 (2005): 51–74.

Deci, Edward, and Richard Ryan. Intrinsic Motivation and Self-Determination in Human Behavior. New York: Plenum, 1985.

Fisher, Mark. Capitalist Realism: Is There No Alternative? Ropley, UK: Zer0 Books, 2010.

Fuller, Matthew. Behind the Blip: Essays on the Culture of Software. Brooklyn, NY: Autonomedia, 2003.

Fuller, Matthew. Introduction to Software Studies \ A Lexicon, ed. Matthew Fuller, 1-13. Cambridge, MA: MIT Press, 2008.

Fuller, Matthew and Andrew Goffey. Evil Media. Cambridge, MA: MIT Press, 2012.

Galloway, Alexander. Protocol: How Control Exists After Decentralization. Cambridge, MA: MIT Press, 2004.

Gehl, Robert W. “A Cultural and Political Economy of Web 2.0.” PhD diss., George Mason University, 2010.

Gehl, Robert W. Reverse Engineering Social Media: Software, Culture, and Political Economy in New Media Capitalism. Philadelphia, PA: Temple University Press, 2014.

Gell, Alfred. Art and Agency: An Anthropological Theory. Oxford: Clarendon Press, 1998.

Gerlitz, Carolin and Anne Helmond. “The Like economy: Social buttons and the data-intensive web.” New Media & Society (published online February 4, 2013), accessed March 12, 2013. doi: 10.1177/1461444812472322.

Gitelman, Lisa. Introduction to “Raw Data” Is an Oxymoron, ed. Lisa Gitelman, 1-14. Cambridge, MA: MIT Press, 2013.

Goffey, Andrew. “Algorithm.” In Software Studies \ A Lexicon, ed. Matthew Fuller, 21-30. Cambridge, MA: MIT Press, 2008.

Graeber, David. Debt: The First 5,000 Years. Brooklyn, NY: Melville House, 2011.

Grosser, Benjamin. “Don’t Give Me the Numbers—an interview with Ben Grosser about Facebook Demetricator.” Interview by Matthew Fuller, Rhizome, November 15, 2012. http://rhizome.org/editorial/2012/nov/15/dont-give-me-numbers-interview-ben-grosser-about-f/

Grosser, Benjamin. “Facebook Demetricator.” net art, 2012-present. http://bengrosser.com/projects/facebook-demetricator/

Grosser, Benjamin. “How the Technological Design of Facebook Homogenizes Identity and Limits Personal Representation.” Hz 19, 2014. http://www.hz-journal.org/n19/grosser.html

Grosser, Benjamin. “Reload The Love!” net art, 2011. http://bengrosser.com/projects/reload-the-love/

Harvey, David. Seventeen Contradictions and the End of Capitalism. New York, NY: Oxford University Press, 2014.

Horkheimer, Max, and Theodor Adorno. Dialectic of Enlightenment. Stanford, CA: Stanford University Press, 2002.

I/O/D. “The Web Stalker.” net art, 1997. http://bak.spc.org/iod/ .

Jurgenson, Nathan. “Review of Ondi Timoner’s We Live in Public.” Surveillance & Society 8, no. 3 (2010): 374-378.

Karppi, Tero. “Death Proof: on the Biopolitics and Noopolitics of Memorializing Dead Facebook Users.” Culture Machine 14 (2013).

Langlois, Ganaele, Greg Elmer, Fenwick McKelvey, and Zachary Devereaux. “Networked Publics: The Double Articulation of Code and Politics on Facebook.” Canadian Journal of Communication 34 (2009): 415-434.

Latour, Bruno. Reassembling the Social: An Introduction to Actor-Network-Theory. New York: Oxford University Press, 2005.

Manovich, Lev. Software Takes Command. softwarestudies.com: 2008, accessed April 1, 2013, http://softwarestudies.com/softbook/manovich_softbook_11_20_2008.pdf (draft).

Marwick, Alice E. “The Public Domain: Social Surveillance in Everyday Life.” Surveillance & Society 9, no. 4 (2012): 378-393.

Marx, Karl, and Friedrich Engels. The Communist Manifesto. London: Pluto Press, 2008.

Maslow, Abraham. “A Theory of Human Motivation.” Psychological Review 50, no. 4 (1943): 370-396.

Mathiesen, Thomas. “The Viewer Society: Michel Foucault’s ‘Panopticon’ Revisited.” Theoretical Criminology 1, no. 2 (May, 1997): 215-234.

Michell, Joel. “Epistemology of Measurement: The Relevance of its History for Quantification in the Social Sciences.” Social Science Information 42, no. 4 (2003): 515-534.

Oliver, Julian and Daniil Vasilev. “Newstweek.” net art, 2013. http://julianoliver.com/output/newstweek.

O’Reilly, Tim. “What is Web 2.0: Design Patterns and Business Models for the Next Generation of Software.” Dated September 30, 2005, accessed April 4, 2013. http://oreilly.com/web2/archive/what-is-web-20.html

Porter, Theodore. Trust in Numbers: The Pursuit of Objectivity in Science and Public Life. Princeton, NJ: Princeton University Press, 1995.

Ryan, Richard, and Edward Deci. “Intrinsic and Extrinsic Motivations: Classic Definitions and New Directions.” Contemporary Educational Psychology 25 (2000): 54-67.

Rushkoff, Douglas. Present Shock: When Everything Happens Now. New York: Penguin, 2013.

Schäfer, Mirko Tobias. Bastard Culture!: How User Participation Transforms Cultural Production. Amsterdam: Amsterdam University Press, 2011.

Shore, Cris and Susan Wright. “Audit Culture and Anthropology: Neo-Liberalism in British Higher Education.” The Journal of the Royal Anthropological Institute, 5, no. 4 (1999): 557-575.

Van Dijck, José. “Facebook as a Tool for Producing Sociality and Connectivity.” Television & New Media 13, no. 2 (February 15, 2012): 160–176. doi:10.1177/1527476411415291.

Veenhof, Sander. “Publicity Plant.” net art and physical installation, 2009. http://sndrv.nl/publicityplant/ .

Wall Street: Money Never Sleeps, directed by Oliver Stone (2010: 20th Century Fox), DVD.

Zuckerberg, Mark. “Building the Social Web Together.” Facebook.com, April 21, 2010, https://www.facebook.com/notes/facebook/building-the-social-web-together/383404517130 .

Author Biography

Artist Benjamin Grosser focuses on the cultural, social, and political implications of software. His works have been exhibited at venues and festivals including Eyebeam in New York, The White Building in London, and the FILE Festival in São Paulo. His works have been featured in Wired, The Atlantic, The Guardian, the Los Angeles Times, Creative Applications Network, Neural, Rhizome, Corriere della Sera, El País, Der Spiegel, and The New Aesthetic. The Huffington Post said of his Interactive Robotic Painting Machine that “Grosser may have unknowingly birthed the apocalypse.” The Chicago Tribune called him the “unrivaled king of ominous gibberish.” Slate referred to his work as “creative civil disobedience in the digital age.” Grosser’s recognitions include commissions and awards from Rhizome, Terminal, and Creative Divergents. He holds an MFA in new media and an MM in music composition from the University of Illinois at Urbana-Champaign, where he teaches in the School of Art & Design.

Website: http://bengrosser.com

Mail: grosserATbengrosser.com

Notes

- Wall Street: Money Never Sleeps, directed by Oliver Stone (2010: 20th Century Fox), DVD. ↩

- Graeber, David. Debt: The First 5,000 Years (Brooklyn, NY: Melville House, 2011), 346. ↩

- “Facebook Reports Second Quarter 2014 Results,” investor.fb.com, accessed May 9, 2013, http://investor.fb.com/releasedetail.cfm?ReleaseID=861599. ↩

- “Alexa Top 500 Global Sites,” Alexa, accessed April 12, 2013, http://www.alexa.com/topsites. At times Facebook has been number one on this list, but currently lags behind Google. ↩

- Schäfer, Mirko Tobias. Bastard Culture!: How User Participation Transforms Cultural Production (Amsterdam: Amsterdam University Press, 2011), 11. ↩

- “The Power of Facebook Advertising—by the numbers.” Facebook. Accessed April 14, 2013, http://www.facebook.com/business/power-of-advertising. ↩

- Marx, Karl, and Friedrich Engels. The Communist Manifesto (London: Pluto Press, 2008), 37. ↩

- Maslow, Abraham H. “A Theory of Human Motivation,” Psychological Review 50, no. 4 (1943): 381. ↩

- Deci, Edward, and Richard Ryan. Intrinsic Motivation and Self-Determination in Human Behavior (New York: Plenum, 1985), 64. ↩

- Fisher, Mark. Capitalist Realism (Ropley, UK: Zer0 Books, 2010), 2. ↩

- Jodi Dean notes that online political comments challenging existing ideology feed a “communicative capitalism” that thrives on the circulation of such opinions without enacting change. See: Dean, Jodi. “Communicative Capitalism: Circulation and the Foreclosure of Politics.” Cultural Politics 1, no. 1 (2005): 51–74. ↩

- Fisher, Capitalist Realism, 4. ↩

- Marx, Communist Manifesto, 7. ↩

- Fisher, Capitalist Realism, 16. ↩

- Harvey, David. Seventeen Contradictions and the End of Capitalism (New York, NY: Oxford University Press, 2014), 222. ↩

- Fisher, Capitalist Realism, 18-19. ↩

- Shore, Cris, and Susan Wright. “Audit Culture and Anthropology: Neo-Liberalism in British Higher Education,” The Journal of the Royal Anthropological Institute 5, no. 4 (1999): 558-563. ↩

- Crosby, Alfred W. The Measure of Reality: Quantification in Western Europe, 1250-1600 (New York, NY: Cambridge University Press, 1996), 11-12. ↩

- Michell, Joel. “Epistemology of Measurement: The Relevance of its History for Quantification in the Social Sciences,” Social Science Information 42, no. 4 (2003): 531. ↩

- Porter, Theodore. Trust in Numbers: The Pursuit of Objectivity in Science and Public Life (Princeton, NJ: Princeton University Press, 1995), 197. ↩

- Porter, Trust in Numbers, 25. ↩

- Bourdieu, Pierre. Language and Symbolic Power (Cambridge, MA: Harvard University Press, 1991), 122. ↩

- Goffey, Andrew. “Algorithm,” in Software Studies \ A Lexicon, ed. Matthew Fuller (Cambridge, MA: MIT Press, 2008), 21-30. ↩

- Manovich, Lev. Software Takes Command (softwarestudies.com: 2008), accessed April 1, 2013, http://softwarestudies.com/softbook/manovich_softbook_11_20_2008.pdf (draft), 108. ↩

- Fuller, Matthew. Introduction to Software Studies \ A Lexicon, edited by Matthew Fuller (Cambridge, MA: MIT Press, 2008), 2. ↩

- Galloway, Alexander. Protocol: How Control Exists After Decentralization (Cambridge, MA: MIT Press, 2004), 3. ↩

- Fuller, Matthew. Behind The Blip: Essays on the Culture of Software (Brooklyn, NY: Autonomedia, 2003), 19. ↩

- “Key Facts,” Facebook.com, accessed April 28, 2013, http://newsroom.fb.com/Key-Facts. ↩

- Computational Culture, http://computationalculture.net. ↩

- Software Studies, MIT Press Book Series, http://mitpress.mit.edu/books/series/software-studies ↩

- Gerlitz, Carolin, and Anne Helmond. “The Like economy: Social buttons and the data-intensive web,” New Media & Society (published online February 4, 2013). ↩

- Gehl, Robert W. Reverse Engineering Social Media: Software, Culture, and Political Economy in New Media Capitalism (Philadelphia, PA: Temple University Press, 2014). ↩

- Grosser, Benjamin. “How the Technological Design of Facebook Homogenizes Identity and Limits Personal Representation,” Hz 19 (2014), http://www.hz-journal.org/n19/grosser.html . ↩

- Gehl, Robert. “A Cultural and Political Economy of Web 2.0,” (PhD diss., George Mason University, 2010), 12. ↩

- Bucher, Taina. “Programmed sociality: A software studies perspective on social networking sites,” (PhD diss., University of Oslo, 2012). ↩

- Bucher, Taina. “Want to be on the top? Algorithmic power and the threat of invisibility on Facebook,” New Media & Society, 14, no. 7 (2012). ↩

- Karppi, Tero. “Death Proof: on the Biopolitics and Noopolitics of Memorializing Dead Facebook Users,” Culture Machine 14 (2013). ↩

- Langlois, Ganaele, Greg Elmer, Fenwick McKelvey, and Zachary Devereaux. “Networked Publics: The Double Articulation of Code and Politics on Facebook,” Canadian Journal of Communication 34 (2009): 415-434. ↩

- Van Dijck, José. “Facebook as a Tool for Producing Sociality and Connectivity,” Television & New Media 13, no. 2 (February 15, 2012): 160–176. ↩

- Cortright, Petra. “Video Catalog,” net art, 2007-present, http://www.petracortright.com/gallery/. ↩

- Bogost, Ian. “Cow Clicker,” Facebook Application, 2010. Also see: http://www.bogost.com/blog/cow_clicker_1.shtml. ↩

- Antonellis, Anthony. “Facebook Bliss,” net art, 2012. http://www.anthonyantonellis.com/bliss. ↩

- Other works include: Veenhof, Sander. “Publicity Plant,” net art and physical installation, 2009; “Internet Famous” by students in Parsons Design & Technology Program, 2008; and Grosser, Benjamin. “Reload The Love!,” net art, 2011. ↩

- Grosser, “How the Technological Design of Facebook Homogenizes Identity and Limits Personal Representation.” ↩

- “The Value of a Liker,” Facebook.com, accessed September 1, 2014, https://www.facebook.com/note.php?note_id=150630338305797 . ↩

- Gerlitz and Helmond, “The Like economy: Social buttons and the data-intensive web,” 12. ↩

- Ibid. ↩

- Adam, Alison. “Lists,” in Software Studies \ A Lexicon, ed. Matthew Fuller (Cambridge, MA: MIT Press, 2008), 174. ↩

- Gehl, “A Cultural and Political Economy of Web 2.0,” 12. ↩

- During August 2014 I conducted a variety of experiments using test users on Facebook to evaluate how the news feed changes for new users. I tried connecting test users both to other new test users and to established Facebook accounts. In every case, a user without a friend or a “like” has an empty news feed. Once a user adds a friend or “likes” a Page, their feed begins to show (in fact, is dominated by) whatever content their new connection produces. As such, this early stage is also dependent on how active the new connections are. ↩

- Eventually, after continued “friending,” a user’s feed becomes too noisy. Facebook manages this with its News Feed algorithm, filtering each user’s news feed so that, among other things, they aren’t overwhelmed with posts. One effect of the filtering is that it discourages the user from removing friends to self-filter their feed. Facebook also allows users to hide all posts from a specified friend—anything to keep the user from removing the friend altogether. ↩

- Bourdieu, Language and Symbolic Power, 72. ↩

- Bourdieu, Pierre. Distinction: A Social Critique of the Judgment of Taste (Cambridge, MA: Harvard University Press, 1984), 66. ↩

- Shore and Wright, “Audit Culture and Anthropology,” 558. ↩

- Fuller, Matthew, and Andrew Goffey. Evil Media (Cambridge, MA: MIT Press, 2012), 130. ↩

- Shore and Wright, “Audit Culture and Anthropology.” ↩

- Bentham, Jeremy. The Panopticon Writings, ed. Miran Bozovic (London: Verso, 1995), 29-95. ↩

- Mathiesen, Thomas. “The Viewer Society: Michel Foucault’s ‘Panopticon’ Revisited,” Theoretical Criminology 1, no. 2 (May, 1997): 215-234. ↩

- See also: Bauman, Zygmunt. Liquid Modernity (Cambridge, UK: Polity Press, 2000), 85-86. ↩

- Jurgenson, Nathan. “Review of Ondi Timoner’s We Live in Public,” Surveillance & Society 8, no. 3 (2010): 377. ↩

- O’Reilly, Tim. “What is Web 2.0: Design Patterns and Business Models for the Next Generation of Software” (dated September 30, 2005, accessed April 4, 2013). ↩

- Marwick, Alice E. “The Public Domain: Social Surveillance in Everyday Life,” Surveillance & Society, 9, no. 4 (2012): 378-393. ↩

- Zuckerberg, Mark. “Building the Social Web Together,” Facebook.com, April 21, 2010. https://www.facebook.com/notes/facebook/building-the-social-web-together/383404517130. ↩

- See https://www.facebook.com/business/news/News-Feed-FYI-A-Window-Into-News-Feed and https://www.facebook.com/help/327131014036297/ for more about the News Feed algorithm. ↩

- Bucher, “Want to be on the top? Algorithmic power and the threat of invisibility on Facebook,” 1167. ↩

- Bucher, “Want to be on the top? Algorithmic power and the threat of invisibility on Facebook,” 1166. ↩

- Fuller and Goffey, Evil Media, 131. ↩

- “Keeping up with the Joneses” is an American idiom that means “trying to match the lifestyle of one’s more affluent neighbors or acquaintances.” See “Keep up” in The American Heritage Dictionary of Idioms (Boston: Houghton Mifflin, 2013), 251. ↩

- See: Jordi Galí, “Keeping up with the Joneses: Consumption Externalities, Portfolio Choice, and Asset Prices,” Journal of Money, Credit and Banking 26, no. 1 (Feb., 1994): 1-8. ↩

- Fuller and Goffey, Evil Media, 130. ↩

- There are various reasons for this, including the increasing page length resulting in a larger (and thus, slower to load) source file, and the increasing amount of processing required by browser-side scripts that work with that source file. ↩

- Rushkoff, Douglas. Present Shock: When Everything Happens Now (New York: Penguin, 2013). ↩

- Rushkoff, Present Shock, location 78/4641 (Kindle Edition). ↩

- Gehl, Reverse Engineering Social Media: Software, Culture, and Political Economy in New Media Capitalism, 42. ↩

- Porter, Trust in Numbers, ix. ↩

- Ibid. ↩

- Porter, Trust in Numbers, 32. ↩

- Porter, Trust in Numbers, 77. ↩

- Gell, Alfred. Art and Agency: An Anthropological Theory (Oxford: Clarendon Press, 1998), 15. ↩

- Gell, Art and Agency, 17. ↩

- We could and perhaps should also include here the designers of the interface, the directors of the corporation, the shareholders of Facebook, the advertisers whose needs thrive on metrics, and any other entity that has had a hand in its development. ↩

- Coole, Diana and Samantha Frost. Introduction to New Materialisms: Ontology, Agency, and Politics, edited by Coole and Frost (Durham, NC: Duke University Press, 2010), 9-10. ↩

- Bennett, Jane. Vibrant Matter: A Political Ecology of Things (Durham, NC: Duke University Press, 2010), viii. ↩

- Latour, Bruno. Reassembling the Social: An Introduction to Actor-Network-Theory (New York: Oxford University Press, 2005), 71. ↩

- Gitelman, Lisa. Introduction to “Raw Data” Is an Oxymoron, ed. Lisa Gitelman (Cambridge, MA: MIT Press, 2013), 8. ↩

- Ryan, Richard, and Edward Deci. “Intrinsic and Extrinsic Motivations: Classic Definitions and New Directions,” Contemporary Educational Psychology 25 (2000): 62. Ryan and Deci discuss anxiety as an extrinsic motivation called introjected regulation, an “internal regulation that is still quite controlling because people perform such actions with the feeling of pressure in order to avoid guilt or anxiety or to attain ego-enhancements or pride.” ↩

- Fisher, Capitalist Realism, 73. Paraphrasing Spinoza from Ethics. ↩

- Grosser, “How the Technological Design of Facebook Homogenizes Identity and Limits Personal Representation.” ↩

- Horkheimer, Max, and Theodor Adorno. Dialectic of Enlightenment (Stanford, CA: Stanford University Press, 2002), 4. ↩

- While Facebook allows users to create new Pages and to associate with other users in a way that approaches ideas of the “long tail” (see “The Long Tail,” Wired, October 2004), the site’s structure still forces those users to conform to existing groupings whenever possible. This results in significantly less difference than would otherwise occur. I explore this further in my Hz article under the heading “Community Pages and the Consolidation of Interest” (Grosser, 2014), though additional research is warranted regarding the conflict between Facebook’s desire for consolidation and its need for new markets. ↩

- Gerlitz and Helmond, “The Like economy: Social buttons and the data-intensive web,” 11. ↩

- Baudrillard, Jean. Simulacra and Simulation (Ann Arbor, MI: University of Michigan Press, 1994). ↩

- For works that examine how minority identities are affected by online spaces, see Brookey, R.A., “Sex Lives in Second Life,” Critical Studies in Media Communication 26, no. 2 (2009): 145-164; Grasmuck, S., “Ethno-Racial Identity Displays on Facebook,” Journal of Computer-Mediated Communication 15 (2009): 158-188; Nakamura, L., Digitizing Race: Visual Cultures of the Internet (Minneapolis, MN: University of Minnesota Press, 2007); Nakamura, L. (ed.), Race After the Internet (New York: Routledge, 2012); Watkins, C., The Young and the Digital: What the Migration to Social Network Sites, Games, and Anytime, Anywhere Media Means for our Future (Boston: Beacon Press, 2009). ↩

- Grosser, “How the Technological Design of Facebook Homogenizes Identity and Limits Personal Representation.” ↩

- Campanelli, Vito. Web Aesthetics: How Digital Media Affect Culture And Society (Rotterdam: NAi, 2010), 96. ↩

- I am certainly not the first artist to take on software as a subject of inquiry; for example, see I/O/D’s Web Stalker and Julian Oliver’s Newstweek. ↩

- The project site is http://bengrosser.com/projects/facebook-demetricator/. The Github source code repository is http://github.com/bengrosser/facebook-demetricator/. ↩

- Note that even though Demetricator hides the metrics, they are still just as active behind the scenes informing the News Feed algorithm’s decisions about how to rank the content it presents. Users are generally aware of this, though an “out of sight, out of mind” approach may contribute to their experience. ↩

- Press coverage of the project has appeared in a wide range of outlets, from the Los Angeles Times to Gizmodo. For a comprehensive listing, see http://bengrosser.com/blog/press-coverage-of-facebook-demetricator/. ↩

- Gregorian, October 22, 2012 (11:25 p.m.), comment on Amar Toor, “Demetricator extension removes ‘like’ and friend counts from your Facebook page,” The Verge, October 22, 2012, http://www.theverge.com/2012/10/23/3541610/facebook-demetricator-browser-extension-chrome-safari-firefox. ↩

- Omnislip, October 24, 2012 (8:49 a.m.), comment on Casey Chan, “Using Facebook Without Numbers is Like Growing Up Without Peer Pressure,” Gizmodo, October 23, 2012, http://gizmodo.com/5954344/using-facebook-without-numbers-is-like-growing-up-without-peer-pressure. ↩

- Fanelli, Jena, “Sucking the fun out of Facebook,” October 31, 2012 (11:03 a.m.), Jena’s CIS Blog, http://jenafanelli.blogspot.com/2012/10/sucking-fun-out-of-facebook.html. ↩

- Grosser, Facebook Demetricator / Feedback, http://bengrosser.com/projects/facebook-demetricator/#feedback. ↩

- Jared, e-mail message to author, October 30, 2012. ↩

- Marley, e-mail message to author, November 8, 2012. ↩

- Jessie, e-mail message to author, October 22, 2012. ↩

- Nicholas, e-mail message to author, October 24, 2012. ↩

- Alexandros, e-mail message to author, November 18, 2012. ↩

- Rachel, e-mail message to author, December 12, 2012. ↩