Google’s Neural Machine Translation

In September 2016, Google researchers announced a new system that would soon become known as Google Neural Machine Translation (GNMT).1 This marked a shift from the statistical machine translation (SMT) model employed by the tech firm since 2007 to a deep-learning-based recurrent neural network model. The new model not only replaced phrase-level translation with sentence-level translation but also made possible zero-shot translation (direct translation between language pairs on which the system had never been trained) without the mediation of an interlingua such as English.2 Google rolled out the service for eight languages in November 2016 and expanded it to roughly twenty languages by April 2017.

Machine translation techniques are classified into rule-based systems that implement grammatical rules, statistical systems typified by phrase-based models, and, more recently, neural-network systems. Although the origin of machine translation can be traced back to Descartes’ 1629 proposal of a universal language whose words share a single symbolic code,3 modern machine translation has its roots in the cryptanalytic technologies that advanced rapidly during the Second World War.4 Subsequently, machine translation began to take shape as a practical technology when Warren Weaver—now regarded as a pioneer of the field—outlined theoretical and technical pathways for computer-based translation grounded in early neural network research, linguistics, and cryptanalysis in his 1949 memorandum ‘Translation’, and when computational linguist R.H. Richens published his 1958 paper ‘Interlingual Machine Translation’. Translation services aimed at the general public, of the sort familiar today, have been available only since 1997, when Systran’s Babelfish system went live on AltaVista. Subsequent advances have produced a range of rule-based and phrase-based systems, but the introduction of neural machine translation systems, typified by GNMT, brought about a decisive leap in translation quality.

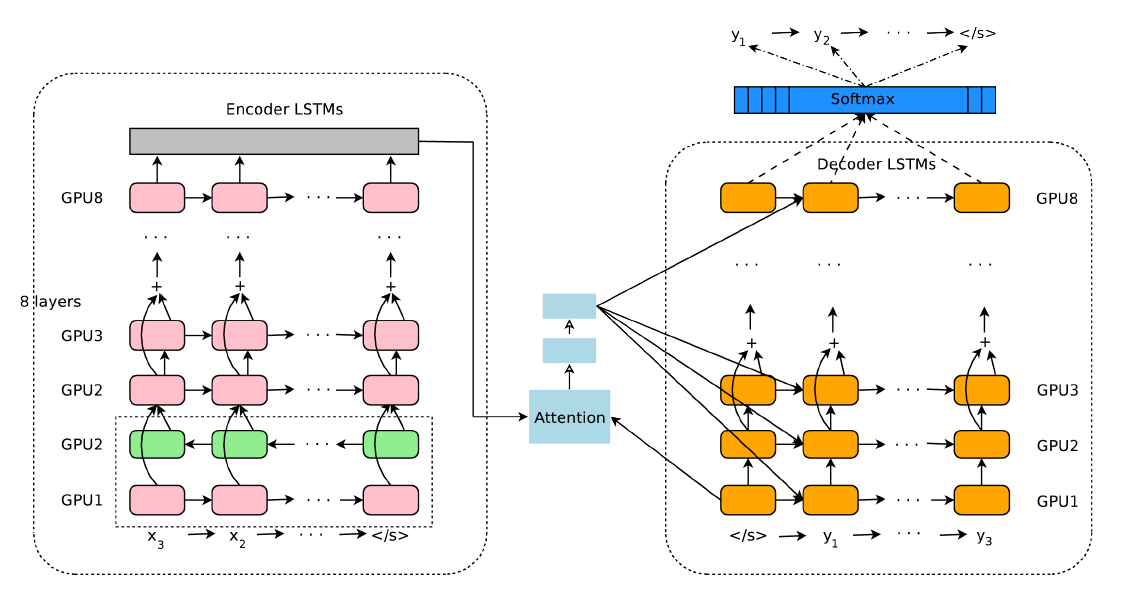

GNMT is built on a neural network called long short-term memory (LSTM), a particular kind of recurrent neural network (RNN). As Figure 1 shows, the system at that time comprised three components: an eight-layer encoder network that reads the source sentence, an eight-layer decoder network that writes it in a new language, and an attention module that identifies the phrases to which the translation should give priority.5 More specifically, the encoder divides the given sentence into words (or signs) and assigns a vector value to each one. In other words, it converts the sentence into a set of vectors with a fixed number of dimensions.6 Receiving this sequence of vectors as input, the decoder generates the words that will form a sentence in the target language, one word at a time. The encoder and decoder are linked by the attention module, which assigns a different weight to each word in the source sentence at every step in which the decoder predicts a word.7 For instance, when translating the Korean sentence ‘철수는 야구를 한다’ (Cheol-Su plays baseball) into English, a system trained on a large corpus of aligned sentences will give the greatest weight to the word ‘야구’ (baseball) at the step in which it produces the corresponding English word.
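The weighting performed by the attention module can be illustrated with a minimal sketch. It is not GNMT's implementation: the three-dimensional vectors are invented for illustration, and simple dot-product scoring is swapped in for the learned scoring function that the real system uses. The function names `softmax`, `dot` and `attend` are likewise hypothetical.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def dot(u, v):
    """Dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))

def attend(decoder_state, encoder_states):
    """Return one weight per source word and the weighted context vector."""
    scores = [dot(decoder_state, h) for h in encoder_states]
    weights = softmax(scores)
    dim = len(encoder_states[0])
    context = [
        sum(w * h[k] for w, h in zip(weights, encoder_states))
        for k in range(dim)
    ]
    return weights, context

# Hypothetical encoder vectors, one per source word (assumed values).
source_words = ["철수는", "야구를", "한다"]
encoder_states = [
    [1.0, 0.0, 0.2],  # 철수는
    [0.1, 2.0, 0.3],  # 야구를
    [0.2, 0.4, 1.0],  # 한다
]
# Hypothetical decoder state at the step that should emit "baseball".
decoder_state = [0.0, 1.5, 0.1]

weights, context = attend(decoder_state, encoder_states)
for word, weight in zip(source_words, weights):
    print(f"{word}: {weight:.3f}")
```

With these assumed values the largest weight falls on ‘야구를’, which is the behaviour the example sentence above describes; the context vector is what the decoder would consult when choosing its next word.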

Figure 1. The architecture of GNMT

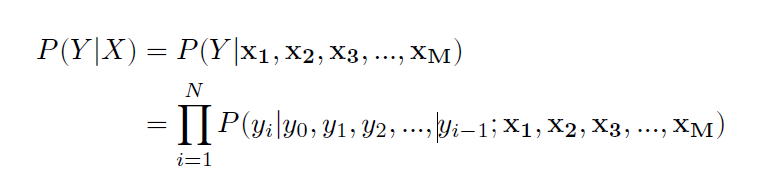

GNMT is based on sequence-to-sequence learning, developed in 2014 by Google’s own research team.8 This means that, instead of the traditional phrase-level training, the model is trained at the level of sequences—that is, sentences. The problem is that accuracy declines as the character string grows longer, and the solution, as noted above, is to convert every sentence, regardless of length, into a fixed number of vectors that can in principle cover all the words that make up the sentence. Accordingly, sentences presented in various lengths are transformed into vector representations with fixed dimensions. The training process for sentence pairs, which proceeds through encoding and decoding, is expressed by the following conditional probability:9
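Written out in the notation of Wu et al., and assuming the standard chain-rule factorisation over target words, the expression takes roughly the following form. The symbol N, standing for the number of words in the target sentence, is introduced here for illustration, and y₀ is taken to be a special start-of-sentence symbol.

$$
P(Y \mid X) = \prod_{i=1}^{N} P\left(y_i \mid y_0, y_1, y_2, \ldots, y_{i-1};\; x_1, x_2, \ldots, x_M\right)
$$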

Here, x₁, x₂, x₃ … xM denote the vectors of the input sentence, and y₀, y₁, y₂ … yi−1 denote the words to be generated in decoding. In other words, when the decoder predicts the output one word at a time, taking into account the weights imposed by the attention mechanism, the probability of the next word is estimated from the vector values of the source words and the previously generated words (together with their weights). Furthermore, as the words are refined through the eight layers, they are integrated by a softmax operation and ultimately assembled into a single sentence. As a full technical description of GNMT’s training and translation process is beyond the scope of this article, we will now turn to the implications of this translation system.
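The word-by-word estimation described above can be made concrete with a small sketch. It assumes that the per-step probability distributions have already been produced by a decoder; the numbers below are invented, and in a real system each distribution would depend on the words generated before it. The helper names `sentence_log_prob` and `greedy_decode` are hypothetical.

```python
import math

def sentence_log_prob(step_distributions, target_words):
    """Sum the log-probabilities of each target word at its own step."""
    total = 0.0
    for distribution, word in zip(step_distributions, target_words):
        total += math.log(distribution[word])
    return total

def greedy_decode(step_distributions):
    """Pick the most probable word at every step."""
    return [max(d, key=d.get) for d in step_distributions]

# Hypothetical softmax outputs for three decoding steps (assumed values).
step_distributions = [
    {"Cheol-Su": 0.90, "plays": 0.05, "baseball": 0.05},
    {"Cheol-Su": 0.05, "plays": 0.80, "baseball": 0.15},
    {"Cheol-Su": 0.02, "plays": 0.03, "baseball": 0.95},
]
target = ["Cheol-Su", "plays", "baseball"]

log_p = sentence_log_prob(step_distributions, target)
sentence_probability = math.exp(log_p)  # product of the three step probabilities
decoded = greedy_decode(step_distributions)
print(decoded, round(sentence_probability, 3))
```

Summing logarithms rather than multiplying raw probabilities is a common design choice because products of many small numbers quickly underflow.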

When Google announced its new GNMT system in 2016, it stressed two points. The first was that the model surpassed earlier phrase-level approaches by combining the sequence-to-sequence learning it had introduced a few years earlier10 with attention mechanisms, which were then gaining traction in natural-language processing and image-based object recognition. The second was the system’s capacity to perform zero-shot translation.11 In other words, GNMT could translate between language pairs on which it had never been trained.12

How, then, is this possible? The answer unfolds in two steps. When GNMT is trained within a single multilingual framework on examples from different language pairs—for instance, Korean-English and Japanese-English—it shares parameters by transferring the translation knowledge gained from one pair to the other.13 Assuming such transfer learning is viable, the research team inferred that translation between untrained language pairs would also be possible, and experiments confirmed this.14 Thus, GNMT trained on Korean-English and Japanese-English successfully translated between Korean and Japanese, a pair on which it had never been trained. Such zero-shot translation through transfer learning without the mediation of an interlingua was unprecedented in the history of machine translation.15
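How a single multilingual model is told which language to produce can be sketched as follows. The `<2es>` token format is the one reported by the GNMT researchers; the language codes `ko`, `ja` and `en`, the Japanese example sentence, the helper names, and the choice to use each sentence pair in both directions are assumptions made for illustration.

```python
def add_target_token(source_sentence, target_lang):
    """Prepend a target-language token such as <2es> to the source text."""
    return f"<2{target_lang}> {source_sentence}"

def build_examples(parallel_corpus):
    """Create (model_input, expected_output) pairs in both directions."""
    examples = []
    for lang_a, text_a, lang_b, text_b in parallel_corpus:
        examples.append((add_target_token(text_a, lang_b), text_b))
        examples.append((add_target_token(text_b, lang_a), text_a))
    return examples

# Hypothetical training data: only Korean-English and Japanese-English.
parallel_corpus = [
    ("ko", "철수는 야구를 한다", "en", "Cheol-Su plays baseball"),
    ("ja", "チョルスは野球をする", "en", "Cheol-Su plays baseball"),
]
examples = build_examples(parallel_corpus)

# A zero-shot request: Korean input with a Japanese target token.
# No training example pairs Korean text with the <2ja> token.
zero_shot_input = add_target_token("철수는 야구를 한다", "ja")
print(zero_shot_input)
```

Because the same parameters serve every pair, the request differs from the training inputs only in the combination of source language and target token, which is why no change to the model itself is needed.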

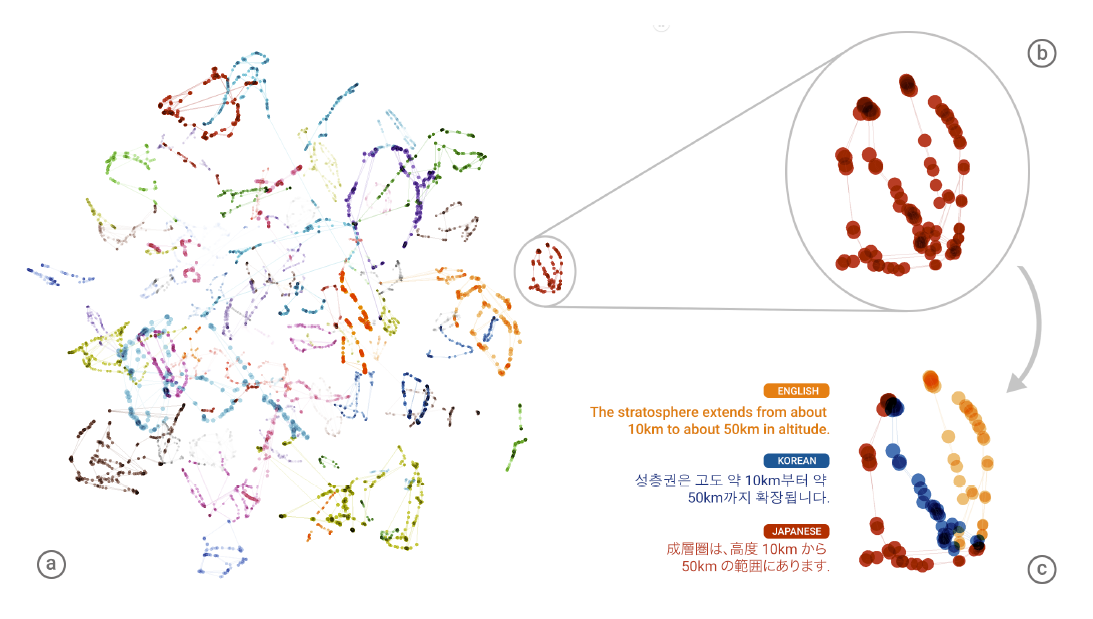

Figure 2. A t-SNE visualisation of GNMT’s representation of sentences

Given that the network shares parameters, can that sharing be made visible? The researchers asked: “Is the network learning some sort of shared representation, in which sentences with the same meaning are represented in similar ways regardless of language?”16 Here, ‘shared representation’ refers to an interlingua.17 To test this, they took a small set of sentences and rendered, in three dimensions, the ‘attention vectors’ that link encoding and decoding. Figure 2 visualises, via t-SNE, the attention vectors of sentences in three languages generated by a translation system trained on English-Korean and English-Japanese.

According to the researchers’ explanation, (a) is a bird’s-eye view of all translated sentences, in which the meaning of each sentence is represented by colour. Korean sentences (translated from English) and Japanese sentences (also translated from English) that share the same meaning appear as dots of the same colour, and the dots form clusters of distinct colours. (b) is an enlarged view of one such cluster. (c) displays, by language, the sentences that belong to that cluster.18 In other words, English, Korean, and Japanese sentences that are semantically identical lie close together to form a single cluster. This shows that the system is not merely memorising phrase-level translations, as earlier methods did, but is encoding something about the semantics of a sentence. The research team interprets this as “a sign of existence of an interlingua in the network.”
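The idea that semantically identical sentences lie close together can be illustrated with a toy measurement of distance between vectors. This is not the t-SNE procedure used by the researchers: the vectors are invented, the labels ‘baseball’ and ‘weather’ stand in for two hypothetical sentence meanings, and cosine similarity is used here simply as one common way of comparing directions in a vector space.

```python
import math

def cosine(u, v):
    """Cosine similarity: 1.0 means the vectors point the same way."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

# Hypothetical sentence vectors keyed by (language, meaning); assumed values.
vectors = {
    ("en", "baseball"): [0.90, 0.10, 0.20],
    ("ko", "baseball"): [0.80, 0.20, 0.10],
    ("ja", "baseball"): [0.85, 0.15, 0.25],
    ("en", "weather"): [0.10, 0.90, 0.30],
    ("ko", "weather"): [0.20, 0.80, 0.20],
    ("ja", "weather"): [0.15, 0.85, 0.35],
}

def nearest(key):
    """Return the key of the most similar vector other than the query itself."""
    others = (k for k in vectors if k != key)
    return max(others, key=lambda k: cosine(vectors[key], vectors[k]))

for key in vectors:
    language, meaning = key
    print(language, meaning, "->", nearest(key))
```

In this toy setting every sentence's nearest neighbour shares its meaning but not its language, which is the pattern the coloured clusters in the figure are said to display.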

In short, Google’s GNMT learns a kind of ‘shared representation’ that expresses semantically identical sentences in the same way regardless of language, and by means of it performs zero-shot translation between language pairs on which it has not been trained. Google’s researchers take this as evidence for the presence of an interlingua, but is that really the case? Does this technological construct now serve as an interlingua in place of a human language such as English? Has Google at last created Descartes’ universal language through technology? If so, might Google’s interlingua be an ‘alien language’ that humans can neither access nor understand?

Benjamin’s “Pure Language”

Walter Benjamin’s theory of translation is set out in ‘The Task of the Translator’, a text written in 1921 as the preface to his German translation of Charles Baudelaire’s ‘Parisian Scenes’ from The Flowers of Evil, published in 1923.19 There he argues that the task of the translator is the discovery of “pure language”; this concept not only distinguishes his theory from other translation theories but also, as we shall see in detail later, resonates strangely with what machine translation systems such as GNMT are oriented towards.

How, then, does Benjamin’s concept of translation diverge from traditional theories of translation? According to Benjamin, conventional theories pursue accuracy and resemblance because they assume the purpose of translation to be the transmission or dissemination of information. He asserts that a translation, like other artistic forms such as poetry, painting, or music, is not directed toward a reader or recipient.20 Although this may at first be hard to grasp, it shows that Benjamin rejects a reader-response type approach and instead upholds a productive, ontological aesthetics.21 He sums up this stance by declaring that “[t]ranslation is a form.”22 He explains that the laws governing translation are immanent in the original—in other words, the work possesses an immanent “translatability” whether or not it is ever translated.23 Translation ultimately concerns the text itself, independent of human agency. According to Benjamin, particular meanings immanent in the original are revealed through translation. Thus, a translation is significant not merely as a secondary vehicle for faithfully conveying the original, but because it makes transparent what was hidden within the original text. “[A translation] does stand in the closest relationship to the original by virtue of the original’s translatability” which Benjamin sees as a “natural” or “vital” connection.24 The translatability of a text is guaranteed by “God’s remembrance” even if no actual translation is ever produced—that is, even without dependence on human beings.

What does it mean that translatability is immanent in the text itself, regardless of whether translation ever occurs, and that this is guaranteed by “God’s remembrance”? What is the significance of this claim, which verges on the mystical? The answer, Benjamin tells us, lies in the inherent “kinship” among languages, a core concept for his theory of translation.25 Benjamin argues that there is a kinship between languages “because languages are not strangers to one another, but are, a priori and apart from all historical relationships, interrelated in what they want to express.”26 Whereas we commonly regard two languages—say, English and French—to be close by tracing their historical lineage, Benjamin insists that languages are interrelated “a priori”, regardless of such lineage. “God’s remembrance” guarantees precisely this inherent kinship among languages. To see why, we must recall Benjamin’s theory of language: in his 1916 essay ‘On Language as Such and on the Language of Man’, written prior to the essay on translation, Benjamin criticises the bourgeois view that treats language as a mere vehicle of information and proposes instead a mystical view in which things transmit their spiritual essence “in” their own language.27 As “thing-language” lacks ‘sound,’ humans translate this imperfect language into a complete one through the addition of human knowledge.28 At the centre of this translation of thing-language into human language lies “naming,” that which adds knowledge and thereby endows things with meaning. Even though language splintered into many dialects after the Fall, its essence remains—hidden, yet unchanged. This unchanged essence constitutes the inherent kinship that grounds translatability, and it is this that Benjamin, in his essay on translation, calls “God’s remembrance”.

How, then, is this concealed kinship revealed? Benjamin holds that it can be realised through translation.29 As the a priori kinship among languages cannot be laid bare directly, he says, it can emerge only in an “embryonic or intensive form.”30 Translation is precisely the act of revealing, in embryonic form, the inherent a priori relations among languages. What exactly, then, becomes visible when such kinship is revealed? It is “pure language”—Benjamin’s key concept and the focus of our inquiry—and Benjamin goes on to lay out the concept of pure language and to explain its relation to kinship:

If the kinship of languages manifests itself in translations, this is not accomplished through the vague resemblance a copy bears to the original. It stands to reason that resemblance does not necessarily appear where there is kinship. The concept of “kinship” as used here is in accord with its more restricted usage: it cannot be defined adequately by an identity of origin between the two cases, although in defining the more restricted usage the concept of “origin” remains indispensable. Where should one look to show the kinship of two languages, setting aside any historical connection? Certainly not in the similarity between works of literature or in the words they use. Rather, all suprahistorical kinship between languages consists in this: in every one of them as a whole, one and the same thing is meant. Yet this one thing is achievable not by any single language but only by the totality of their intentions supplementing one another: the pure language. Whereas all individual elements of foreign languages—words, sentences, associations—are mutually exclusive, these languages supplement one another in their intentions.31

To summarise Benjamin’s account, translatability rests on the inherent kinship among languages, and translation is not a search for the original meaning as conveyed in one language but an activity oriented toward “pure language,” which resides in the supplementary relation between the language of the original and that of the translator. In the act of translation, we seek, and can verify, the discovery of pure language. Benjamin defines pure language as “the totality of their intentions supplementing one another.” What does it mean for languages to supplement one another in respect of meaning or intention? With the notion of intention in view, he distinguishes between “what is meant” and the “way of meaning,” and explains the idea of pure language through the relation between these two. Taking the word for bread as an example, he notes that the German brot and the French pain share what is meant while differing in the way of meaning, and he explains that, within this relation, pure language emerges in the process of translation:

[T]o be more specific, the way of meaning in them is supplemented in its relation to what is meant. In the individual, unsupplemented languages, what is meant is never found in relative independence, as in individual words or sentences; rather it is in a constant state of flux—until it is able to emerge as the pure language from the harmony of all the various ways of meaning. For a long time it remains hidden in the languages. If, however, these languages continue to grow in this way until the messianic end of their history, it is translation that catches fire from the eternal life of the works and the perpetually renewed life of language.32

Translation is thus a search for the “pure language” hidden within distinct languages; yet the relation between what is meant and the way of meaning cannot be exposed through an inquiry confined to a single language. Benjamin expresses this by saying that “content and language form a certain unity in the original, like a fruit and its skin”.33 It is this “disjunction” between content and language, between what is meant and the way of meaning, that often renders translation difficult and superficial. Pure language emerges only within a relational context, the supplementary relation among languages. For this reason, the poet’s task—directed wholly toward a particular linguistic context—differs from that of the translator, which approaches language in general from the perspective of its totality.34 In short, translation is the effort to uncover the pure language hidden in each individual language while taking account of the totality of relations among languages. “Pure language” is precisely where machine translation, epitomised by GNMT, resonates with Benjamin’s theory. Has GNMT, then, discovered pure language? We shall examine this question in detail later; but for the moment, how should translation itself be carried out?

Benjamin maintains that, in practice, two seemingly contradictory principles, fidelity and licence, must be reconciled if one is to “[ripen] the seed of pure language”.35 He describes these principles as “the freedom to give a faithful reproduction of the sense and, in its service, fidelity to the word.”36 How is this possible in practice? Benjamin underscores the importance of a “literal rendering of the syntax”.37 To that end, he says, the translator must treat the word, not the sentence, as the primary unit of translation. The reason is that, if the sentence is a wall barring access to the source language, literalness is an arcade. This point raises another important issue for machine translation systems such as GNMT. Setting that issue aside as well for the moment, how is translational freedom possible when word-based literal rendering is stressed? Benjamin’s translational freedom seems possible only when word-based literal rendering is raised to a higher level. He illustrates this point through an analogy:

Of necessity, therefore, the demand for literalness, whose justification is obvious but whose basis is deeply hidden, must be understood in a more cogent context. Fragments of a vessel that are to be glued together must match one another in the smallest details, although they need not be like one another. In the same way a translation, instead of imitating the sense of the original, must lovingly and in detail incorporate the original’s way of meaning, thus making both the original and the translation recognizable as fragments of a greater language, just as fragments are part of a vessel.38

Here, translation is likened to joining the shards of a porcelain vessel, and those shards to individual words. The fragments of a higher order of language correspond to pure language. Translation, with words as its material and guided by the principle of fidelity, is a process oriented towards the discovery of pure language.39 The existence of individual languages, the multiplicity of historical tongues, is not a negative factor in translation but rather aids the search for the totality of meaning, namely pure language. Through this, the limitations of a particular language can be overcome. According to Benjamin, licence in translation is meaningful in so far as it advances “the interest of pure language.”40 Ultimately, the task of the translator is to liberate pure language from its confinement in the particular language in which the original is written.

Rather, freedom proves its worth in the interest of the pure language by its effect on its own language. It is the task of the translator to release in his own language that pure language which is exiled among alien tongues, to liberate the language imprisoned in a work in his re-creation of that work. For the sake of the pure language, he breaks through decayed barriers of his own language.41

To summarise, translation, for Benjamin, means the following: first, translation is a form in itself, not a vehicle for transmitting the information contained in the original. This is because a text possesses translatability whether or not it is ever translated. Second, the translatability guaranteed by “God’s remembrance” is grounded in the inherent kinship among languages. Third, translation is the search for pure language—the totality of mutually supplementing meanings—whose source is this kinship; it is a “longing for linguistic complementation”, a longing for pure language.42 Fourth, the basis of translation is formed by the principle of fidelity that concentrates on the word rather than the sentence. Fifth, translation is the act of liberating pure language from its confinement within a particular language.

Earlier, in discussing Benjamin’s theory of translation, I raised two questions concerning GNMT. First, has GNMT in fact discovered the “pure language” of which Benjamin speaks? Second, how does the relation between sentences and words play out in machine translation? What significance does GNMT’s shift from a phrase-level to a sentence-level model hold for translation theory? Ultimately, has the ‘Google translator’, rather than the human translator, fulfilled its task by means of the machine?

GNMT’s Interlingua and Benjamin’s Pure Language

The interlingua whose traces the GNMT researchers claim to have detected and the “pure language” advanced in Benjamin’s translation theory resonate across the hundred years that separate them. This resonance can be summarised in three propositions. First, with respect to the inherent relations among languages: in GNMT, semantically identical sentences are represented in much the same way within the vector space, regardless of language. In other words, different languages commonly possess “shared representations” produced through neural-network-based machine learning. Correspondingly, Benjamin maintains that a suprahistorical, a priori kinship among languages is guaranteed by “God’s remembrance.” Second, with respect to the matter of an interlingua or, in Descartes’ words, “universal language”: GNMT shows that a machine-constructed “shared representation,” rather than any human tongue, serves that role. Correspondingly, Benjamin argues that, through the act of translation, pure language (the totality of intentions by which languages supplement one another) comes to light. Third, with respect to the translation process itself: GNMT, unlike earlier phrase-level systems, executes so-called “sequence-to-sequence translation” at the level of sentences rather than words, whereas Benjamin argues that the primary element in translation is the word, not the sentence. Although this third proposition appears to set the two approaches at odds, GNMT’s sequence translation neither ignores the word as a unit nor treats the sentence as an indivisible whole. As noted above, GNMT reads each word of the source sentence in order and produces the target sentence word by word in the same fashion. In that sense, GNMT too regards the word as the fundamental unit of translation. The difference lies in emphasis: Benjamin’s theory emphasises the licence of sentence construction grounded in the principle of word-based fidelity, whereas GNMT employs a probabilistic word-by-word selection process.

| GNMT | Benjamin |
|---|---|
| Google Translate | Human translator |
| Multilingual | Unfamiliar languages |
| Interlingua | Pure language |
| Shared representation | Totality of intentions |
| Virtual relationships | Suprahistorical, a priori relationships: the kinship between languages |
| Machine learning (LSTM) | God’s remembrance |
| Technological, machinic | Creative |
| (Word →) Sentence | Word → Sentence |

Table 1. GNMT’s interlingua and Benjamin’s “pure language”

Of the three propositions, the central issue is the second: whether the “shared representation” detected by GNMT truly signals the presence of an interlingua, and whether it amounts to the “pure language” that, according to Benjamin, is the goal of translation. A link between machine translation and Benjamin has already been suggested, albeit not in detail. Julian Dibbell argues that Babelfish, the online translation system for AltaVista mentioned earlier, realises the pure language posited in Benjamin’s theory of translation.43 He even claims that “Benjamin in fact had something very much like Babelfish in mind.” Dibbell suggests that Benjamin’s pure language, a nobler, purer tongue that preceded Babel’s fragmentation, may reveal itself more clearly through machine translation than through human translators. To test this, he reports an experiment with Babelfish using William Butler Yeats’ poem ‘When You Are Old’. He translated the English original into Portuguese, then back into English, and compared the original with the round-trip version to see what difference Babelfish could make. While withholding a final verdict, he concludes by posing a question: “[c]an we now judge whether Babelfish indeed reaches deeper into that space between languages[?]” He argues that while the answer is elusive, the history of machine translation seems to have “veered between the two extremes of license and literalism, seeking at its best a middling compromise.” This corresponds precisely to Benjamin’s call to reconcile the principle of fidelity with the principle of licence.

In the search for resonance between GNMT’s interlingua and Benjamin’s “pure language,” the final point to stress is that neither constitutes a human language such as English within the English-Korean and English-Japanese pairing. The reason is that the former refers to a shared representation, whereas the latter denotes the totality of semantic relations. In June 2017, reports circulated that Facebook might have discovered a machine language.44 This language, dubbed “facebooklish,” was discovered by chance while the company built a chatbot for negotiation; the bot had spontaneously invented a language similar to human language but incomprehensible to humans. Debate ensued over whether it could be called a language at all, yet one fact remained clear: it is a non-human or machine language, wholly distinct from GNMT’s interlingua and Benjamin’s “pure language.” In short, GNMT’s interlingua and Benjamin’s “pure language” should be viewed as ‘relational representations’ between languages. Put differently, what GNMT has discovered is a ‘virtual network’ akin to Benjamin’s “pure language.” Such virtual networks are actualised as concrete language through the act of translation.

Benjamin’s philosophy of translation sheds light on the nature of AI-based machine-translation systems such as GNMT and indicates what deserves our attention within them. GNMT, in turn, demonstrates how the discovery of pure language—the task Benjamin assigns to the translator—might be technologically embodied. Their interplay lets us discern a moment of resonance in the repetition of both material and conceptual patterns. Building on that resonance, technology criticism may well uncover further points of convergence between philosophy and advanced technology.

References

Battles, Matthew. “I After the Cloudy Doubly Beautifully.” Hilobrow, June 2, 2009. http://hilobrow.com/2009/06/02/i-after-the-cloudy-doubly-beautifully/.

Benjamin, Walter. “On Language as Such and on the Language of Man.” In Walter Benjamin: Selected Writings, Vol. 1: 1913-1926, edited by Marcus Bullock and Michael W. Jennings, 62–74. Cambridge, MA: The Belknap Press of Harvard University Press, 1996.

Benjamin, Walter. “The Task of the Translator.” In Walter Benjamin: Selected Writings, Vol. 1: 1913-1926, edited by Marcus Bullock and Michael W. Jennings, 253–263. Cambridge, MA: The Belknap Press of Harvard University Press, 1996.

Choi, Seongman. “Balter benyamin sasangui todae: eoneo-beonyeok-mimesis” [Foundation of Walter Benjamin’s thought: language-translation-mimesis]. In Balter benyamin seonjip 6: eoneo ilbangwa inganui eoneoe daehayeo, beonyeokjaui gwaje oe [Walter Benjamin selected works 6: On language as such and on the language of man, the task of the translator and others], translated by Choi Seongman, 5–57. Seoul: Doseo chulpan Gil, 2008.

Dibbell, Julian. “After Babelfish.” Feed online magazine, July 26, 2000. http://www.juliandibbell.com/texts/feed_babelfish.html.

Dupeau, Jeanne. “Attention Mechanism.” Heuritech (blog), Medium, December 5, 2019. https://medium.com/heuritech/attention-mechanism-5aba9a2d4727.

Johnson, Melvin, Mike Schuster, Quoc V. Le, Maxim Krikun, Yonghui Wu, Zhifeng Chen, Nikhil Thorat, et al. “Google’s Multilingual Neural Machine Translation System: Enabling Zero-Shot Translation.” November 14, 2016. arXiv:1611.04558 [cs.CL].

Lafrance, Adrienne. “What an AI’s Non-Human Language Actually Looks Like.” The Atlantic, June 20, 2017. https://www.theatlantic.com/technology/archive/2017/06/what-an-ais-non-human-language-looks-like/530934/.

Nabugodi, Miriam. “Pure Language 2.0: Walter Benjamin’s Theory of Language and Translation Technology.” Feedback, May 19, 2014. https://openhumanitiespress.org/feedback/literature/pure-language-2-0-walter-benjamins-theory-of-language-and-translation-technology/.

Robinson, Andrew. “Walter Benjamin: Language and Translation.” Ceasefire Magazine, May 10, 2013. https://ceasefiremagazine.co.uk/walter-benjamin-language-translation/.

Schuster, Mike, Melvin Johnson, and Nikhil Thorat. “Zero-Shot Translation with Google’s Neural Machine Translation System.” Google AI Blog, November 22, 2016. https://ai.googleblog.com/2016/11/zero-shot-translation-with-googles.html.

Sutskever, Ilya, Oriol Vinyals, and Quoc V. Le. “Sequence to Sequence Learning with Neural Networks.” In Advances in Neural Information Processing Systems 27 (NIPS 2014): 3104–3112, 2014.

Wikipedia. “Machine Translation.” https://en.wikipedia.org/wiki/Machine_translation.

Wu, Yonghui, Mike Schuster, Zhifeng Chen, Quoc V. Le, Mohammad Norouzi, Wolfgang Macherey, Maxim Krikun, et al. “Google’s Neural Machine Translation System: Bridging the Gap between Human and Machine Translation.” September 26, 2016. arXiv:1609.08144 [cs.CL].

Notes

- Yonghui Wu et al., “Google’s Neural Machine Translation System: Bridging the Gap between Human and Machine Translation,” September 26, 2016, arXiv:1609.08144. ↩

- Melvin Johnson et al., “Google’s Multilingual Neural Machine Translation System: Enabling Zero-Shot Translation,” November 14, 2016, arXiv:1611.04558. ↩

- Wikipedia, “Machine Translation,” https://en.wikipedia.org/wiki/Machine_translation. ↩

- Julian Dibbell, “After Babelfish,” Feed online magazine, July 26, 2000, http://www.juliandibbell.com/texts/feed_babelfish.html. ↩

- Wu et al., “Google’s Neural Machine Translation System.” ↩

- Regardless of how many words a sentence contains, the encoder sets the number of units, referred to as cells, to a fixed value, for instance 1,000, when it converts the sentence into vector values. Consequently, the sentence occupies a 1,000-dimensional space. Ilya Sutskever, Oriol Vinyals, and Quoc V. Le, “Sequence to Sequence Learning with Neural Networks,” in Advances in Neural Information Processing Systems 27 (NIPS 2014): 3104–3112, 2014. ↩

- This is called the “attention mechanism.” It applies to AI systems the attention mechanism studied in cognitive science and neuroscience, especially in visual perception, and is regarded as a breakthrough technique in RNNs, particularly in recent LSTM models. It is used not only in natural-language processing (NLP) tasks such as translation but also in image-based object recognition, where it helps determine which parts of an image deserve focus. For a technical explanation of the attention mechanism, see Jeanne Dupeau, “Attention Mechanism,” Heuritech (blog), Medium, December 5, 2019, https://medium.com/heuritech/attention-mechanism-5aba9a2d4727. ↩

- Sutskever et al., “Sequence to Sequence Learning with Neural Networks.” ↩

- Wu et al., “Google’s Neural Machine Translation System.” ↩

- Sutskever et al., “Sequence to Sequence Learning with Neural Networks.” ↩

- Johnson et al., “Google’s Multilingual Neural Machine Translation System.” ↩

- No additional modification of GNMT is required for “zero-shot translation.” The base system can be used as is; one simply prepends a token that specifies the target language—for example, <2es> when the sentence is to be translated into Spanish—to the input string. ↩

- Mike Schuster, Melvin Johnson, and Nikhil Thorat, “Zero-Shot Translation with Google’s Neural Machine Translation System,” Google AI Blog, November 22, 2016, https://ai.googleblog.com/2016/11/zero-shot-translation-with-googles.html. ↩

- Johnson et al., “Google’s Multilingual Neural Machine Translation System.” ↩

- Schuster et al., “Zero-Shot Translation with Google’s Neural Machine Translation System.” Though not discussed here, the research team demonstrated that GNMT can not only perform “zero-shot translation” but also translate sentences in which multiple languages are mixed (Johnson et al., “Google’s Multilingual Neural Machine Translation System”). ↩

- Johnson et al., “Google’s Multilingual Neural Machine Translation System.” ↩

- Schuster et al., “Zero-Shot Translation with Google’s Neural Machine Translation System.” ↩

- Schuster et al., “Zero-Shot Translation with Google’s Neural Machine Translation System.” ↩

- Walter Benjamin, “The Task of the Translator,” in Walter Benjamin: Selected Writings, Vol. 1: 1913–1926, ed. Marcus Bullock and Michael W. Jennings (Cambridge, MA: The Belknap Press of Harvard University Press, 1996), 253–263. ↩

- Benjamin, “The Task of the Translator,” 253. ↩

- Seongman Choi, “Balter benyamin sasangui todae: eoneo-beonyeok-mimesis” (Foundation of Walter Benjamin’s thought: language-translation-mimesis), in Balter benyamin seonjip 6: eoneo ilbangwa inganui eoneoe daehayeo, beonyeokjaui gwaje oe (Walter Benjamin selected works 6: On language as such and on the language of man, the task of the translator and others), trans. Choi Seongman (Seoul: Doseo chulpan Gil, 2008), 19. ↩

- Benjamin, “The Task of the Translator,” 254. ↩

- Benjamin, “The Task of the Translator,” 254. ↩

- Benjamin, “The Task of the Translator,” 254. ↩

- Benjamin, “The Task of the Translator,” 255–256. ↩

- Benjamin, “The Task of the Translator,” 255. ↩

- Walter Benjamin, “On Language as Such and on the Language of Man,” in Walter Benjamin: Selected Writings, Vol. 1: 1913–1926, ed. Marcus Bullock and Michael W. Jennings (Cambridge, MA: The Belknap Press of Harvard University Press, 1996), 62–74. ↩

- Benjamin, “On Language as Such and on the Language of Man,” 70. ↩

- Benjamin, “The Task of the Translator.” ↩

- Benjamin, “The Task of the Translator,” 255. ↩

- Benjamin, “The Task of the Translator,” 256–257. ↩

- Benjamin, “The Task of the Translator,” 257. ↩

- Benjamin, “The Task of the Translator,” 258. ↩

- Benjamin, “The Task of the Translator,” 258. Benjamin states that translation is situated “midway between poetry and theory” (Benjamin, “The Task of the Translator,” 259). ↩

- Benjamin, “The Task of the Translator,” 259. ↩

- Benjamin, “The Task of the Translator,” 259. ↩

- Benjamin, “The Task of the Translator,” 260. ↩

- Benjamin, “The Task of the Translator,” 260. ↩

- In this regard, Benjamin says that the interlinear translation of the Scriptures constitutes the prototype or ideal of all translation (Benjamin, “The Task of the Translator,” 263). ↩

- Benjamin says that “[i]n this pure language—which no longer means or expresses anything but is, as expressionless and creative Word, that which is meant in all languages—all information, all sense, and all intention finally encounter a stratum in which they are destined to be extinguished” (Benjamin, “The Task of the Translator,” 261). He regards this disappearance of meaning as a “liberation” from language’s communicative, merely incidental aspect. In other words, language becomes pure only when it is no longer communicative. See also Mathelinda Nabugodi, “Pure Language 2.0: Walter Benjamin’s Theory of Language and Translation Technology,” Feedback, May 19, 2014; Andrew Robinson, “Walter Benjamin: Language and Translation,” Ceasefire Magazine, May 10, 2013. ↩

- Benjamin, “The Task of the Translator,” 261. ↩

- Benjamin, “The Task of the Translator,” 260. ↩

- Dibbell, “After Babelfish.” Battles, citing Dibbell, likewise offered a brief exploration of the connection between machine translation and Benjamin (Matthew Battles, “I After the Cloudy Doubly Beautifully,” Hilobrow, June 2, 2009, http://hilobrow.com/2009/06/02/i-after-the-cloudy-doubly-beautifully/). ↩

- Adrienne LaFrance, “What an AI’s Non-Human Language Actually Looks Like,” The Atlantic, June 20, 2017, https://www.theatlantic.com/technology/archive/2017/06/what-an-ais-non-human-language-looks-like/530934/. ↩
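
The note above on zero-shot translation says that the only change needed is a token prepended to the input string to name the target language, for example <2es> for Spanish. The following is a minimal sketch of that preprocessing step alone, not of GNMT itself. The function name `add_target_token` and the use of plain Python strings are assumptions made for illustration; the token format follows the <2es> example given in the note.

```python
def add_target_token(sentence: str, target_lang: str) -> str:
    """Prepend a target-language token such as <2es> to the input string.

    Hypothetical helper for illustration; it only formats the input and
    performs no translation.
    """
    return f"<2{target_lang}> {sentence}"


# The source sentence is unchanged; only the leading token differs.
spanish_input = add_target_token("Hello, how are you?", "es")
japanese_input = add_target_token("Hello, how are you?", "ja")
print(spanish_input)
print(japanese_input)
```

Because the target language is signalled only in the input string, the same model can be asked for a language pair it was never trained on simply by changing the token.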