Contemporary media is experienced, created, edited, remixed, organized and shared with software. This software includes stand-alone professional media design and management applications such as Photoshop, Illustrator, Flash, Dreamweaver, Final Cut, After Effects, Aperture, and Maya; consumer-level apps such as iPhoto, iMovie, or Picasa; tools for sharing, commenting, and editing provided by social media sites such as Facebook, YouTube, Vimeo, and Photobucket; and the numerous media viewing, editing and sharing apps available on mobile platforms. To understand media today we need to understand media software – its genealogy (where it comes from), its anatomy (interfaces and operations), and its practical and theoretical effects.1 How does media authoring software shape the media being created, making some design choices seem natural and easy to execute, while hiding other design possibilities? How does media viewing / managing / remixing software affect our experience of media and the actions we perform on it? How does software change what “media” is conceptually?

This article approaches some of these questions via the analysis of a software application that has become synonymous with “digital media” – Adobe Photoshop. Like other professional programs for media authoring and editing, Photoshop’s menus contain many dozens of separate commands. If we consider that almost all the commands contain multiple options that allow each command to do a number of different things, the complete number of operations runs into the thousands.

This multiplicity of operations offered in contemporary application software creates a challenge for Software Studies. If we are to understand how software applications shape our worlds and our imaginations (what people imagine they can do with software), we need some way of sorting all these operations into fewer categories so we can start building a theory of application software. This can’t be achieved by simply following the top menu categories offered by applications. (For example, Photoshop CS4’s top menu includes File, Edit, Layer, Select, Filter, 3D, View, Window, and Help.) Since most applications include their own unique categories, our combined list would be too large. So we need to design a more general scheme.

This article starts by proposing two such possible schemes and then tests them by analyzing a subset of Photoshop’s commands which, in a certain sense, stand in for this application in our cultural imaginary: Filters. In the last section, I also look at another key feature of Photoshop – Layers.

It is important to point out that the two proposed schemes are intended to serve only as provisional categories. They provide one possible set of directions – an equivalent of North, South, West and East for a map where we can locate multiple operations of media design software. Like any first sketch, no matter how imprecise, this map is useful because now we have something to modify as we go forward.

A Map of Media Software

The first scheme that we will test divides all software techniques for working with media into two types, depending on which data types they can work on.

1a) The first type is media creation, manipulation, and access techniques that are specific to particular types of data. In other words, these techniques can be used only on a particular data type (or a particular kind of “media content”). I am going to refer to these techniques as media specific (the word “media” in this case stands for “data type”). For example, the technique of geometrical constraint satisfaction already available in the first graphical editor – Ivan Sutherland’s Sketchpad (1961-1962) – can work on graphical data defined by points and lines. However, it would be meaningless to apply this technique to text. Another example: today image editing programs usually include various filters such as Blur and Sharpen that can operate on continuous tone images. But normally we would not be able to blur or sharpen a 3D model. We can go on with such examples: it would be as meaningless to try to “extrude” a text or “interpolate” it as to define a number of columns and tab stops for an image or a sound composition.

1b) The second type is new software techniques that can work with digital data in general (i.e. they are not specific to particular types of data). The examples are “view control,” hyperlinking, sort, search, and network protocols such as HTTP. They are general ways of manipulating data regardless of what this data encodes: pixel values, text characters, 3D shapes, etc. I will refer to these techniques as media independent. An example of such a technique is Douglas Engelbart’s “view control” (mid 1960s) – the idea that the same information can be displayed in many different ways. This concept is now implemented in most media editors so it works with images, 3D models, video files, animation projects, graphic designs, sound compositions, and text. View control has also become part of modern operating systems (such as Mac OS X, Microsoft Windows, or Google Chrome OS). We use view control daily when we change the file “view” between “icons,” “list,” and “columns” (these are the names used in Mac OS X; other operating systems may use different names to refer to the same views). Media independent techniques also include interface commands such as cut, copy and paste. For instance, you can select a file name in a directory, a group of pixels in an image, or a set of polygons in a 3D model, and then cut, copy, and paste these objects.
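
The media independence of cut and paste can be illustrated with a minimal sketch. It assumes that each kind of content is represented as a plain Python sequence; the function names `cut` and `paste` are hypothetical and do not describe how any particular application implements its clipboard.

```python
def cut(items, start, end):
    """Remove items[start:end]; return (what remains, the clipboard contents)."""
    return items[:start] + items[end:], items[start:end]


def paste(items, clipboard, at):
    """Insert the clipboard contents into items at position `at`."""
    return items[:at] + clipboard + items[at:]


# The same two functions work regardless of what the data encodes.
text = "media software"                      # text characters
pixels = [12, 40, 200, 255, 90]              # grayscale pixel values
polygons = [("tri", 0), ("quad", 1), ("tri", 2)]  # stand-ins for 3D faces

rest, clip = cut(text, 0, 6)                 # clip holds "media "
print(paste(rest, clip, len(rest)))          # moves the word to the end

rest, clip = cut(pixels, 1, 3)
print(paste(rest, clip, 0))                  # moves two pixels to the front

rest, clip = cut(polygons, 2, 3)
print(paste(rest, clip, 0))                  # moves one face to the front
```

Nothing in these functions refers to images, text, or geometry; they only assume that the data can be sliced and concatenated, which is what makes the technique independent of the data type.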

My second scheme also divides software techniques for working with media data into two types, but it does this in a different way. What matters here are the relations between software techniques and pre-digital media technologies. (This is a scheme particularly relevant for media historians.)

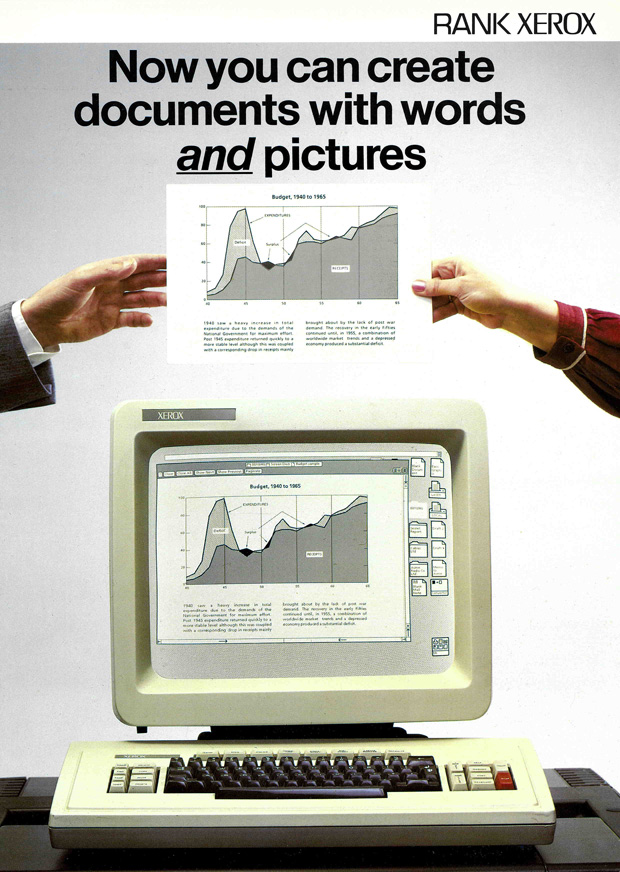

2a) The first type is simulations of prior physical media augmented with new properties and functions.2 When we use computers to simulate some process in the real world – the behavior of a weather system, the processing of information in the brain, the deformation of a car in a crash – our concern is to correctly model the features of this process or system. We want to be able to test how our model would behave in different conditions with different data, and the last thing we want is for the computer to introduce some new properties into the model that we ourselves did not specify. In short, when we use computers as a general-purpose medium for simulation, we want this medium to be completely “transparent.” But what happens when we simulate different media in a computer? In this case, the appearance of new properties may be welcome as they can extend the expressive and communication potential of these media. This idea was already clearly articulated at Xerox PARC in the first part of the 1970s – a place that more than any other is responsible for creating the category of software applications. When Alan Kay and his colleagues at PARC created computer simulations of existing physical media – i.e. the software tools for representing, creating, editing, and viewing these media – they “added” many new properties. Writing about their work in 1977, Kay and Adele Goldberg for instance point out that “It [the electronic book] need not be treated as a simulated paper book since this is a new medium with new properties. A dynamic search may be made for a particular context. The non-sequential nature of the file medium and the use of dynamic manipulation allows a story to have many accessible points of view.”3

2b) The second type is a number of new computational media that do not have any obvious equivalents in previous physical or electronic media. Here are a few examples of these “new media” listed with names of the people and/or places usually credited as their inventors: hypertext and hypermedia (Ted Nelson); interactive navigable 3D spaces (Ivan Sutherland); interactive multimedia (Architecture Machine Group’s “Aspen Movie Map”); the Internet (Paul Baran and Lawrence Roberts); the World Wide Web (Tim Berners-Lee).

While for a media historian this scheme is quite meaningful, what about users who are “digital natives”? These software users may never have directly used any other media besides tablets or laptops, or mobile media devices (mobile phones, cameras, music players); and they are also likely to be unfamiliar with the details of 20th century cel animation, film editing equipment, or any other pre-digital media technology. Does this mean that the distinction between software simulations of previously existing media tools and new “born digital” media techniques has no meaning for digital natives but only matters for historians of media such as myself?

I think that while the semantics of this distinction (i.e. the reference to previous technologies and practices) may not be meaningful to digital natives, the distinction itself is something these users experience in practice. To understand why this is the case, let’s ask if all “born digital” media techniques available in media authoring software applications may have something in common – besides the fact that they did not exist before software.

One of the key uses of digital computers from the start was automation. As long as a process can be defined as a finite set of simple steps (i.e. as an algorithm), a computer can be programmed to execute these steps without human input. In the case of application software, the execution of any command involves “low-level” automation (since a computer automatically executes a sequence of steps of the algorithm behind the command). However, what is important from the user’s point of view is the level of automation being offered in the command’s interface.

Many software techniques that simulate physical tools share a fundamental property with these tools: they require a user to control them “manually.” The user has to micro-manage the tool, so to speak, directing it step-by-step to produce the desired effect. For instance, you have to explicitly move the cursor in a desired pattern to produce a particular brushstroke using a brush tool; you also have to explicitly type every letter on a keyboard to produce a desired sentence. In contrast, many of the techniques that do not simulate anything that existed previously – at least, not in any obvious way – offer higher-level automation of creative processes. Rather than controlling every detail, a user specifies parameters and controls and sets the tool in motion. All generative (also called “procedural”) techniques available in media software fall into this category. For example, rather than having to create a rectangular grid made of thousands of lines by hand, a user can specify the width and the height of the grid and the size of one cell, and the program will generate the desired result. Another example of this higher-level automation is interpolation of key values offered by animation software. In a 20th century animation production, a key animator drew key frames which were then forwarded to human inbetweeners who created all the frames in between the key frames. Animation software automates the process of creating in-between drawings by automatically interpolating the values between the keyframes.
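
The two examples of higher-level automation mentioned above, grid generation and keyframe interpolation, can be sketched in a few lines. This is only an illustration under stated assumptions: the function names are hypothetical, the grid is returned as a list of line segments, and the in-betweens are computed by simple linear interpolation, whereas actual animation software typically offers other interpolation curves as well.

```python
def make_grid(width, height, cell):
    """Return the line segments ((x1, y1), (x2, y2)) of a rectangular grid."""
    lines = []
    # Vertical lines, one every `cell` units across the width.
    for x in range(0, width + 1, cell):
        lines.append(((x, 0), (x, height)))
    # Horizontal lines, one every `cell` units down the height.
    for y in range(0, height + 1, cell):
        lines.append(((0, y), (width, y)))
    return lines


def interpolate(keyframes, frame):
    """Linearly interpolate a value at `frame`.

    `keyframes` is a list of (frame_number, value) pairs sorted by
    frame number, with no repeated frame numbers (an assumption).
    """
    for (f0, v0), (f1, v1) in zip(keyframes, keyframes[1:]):
        if f0 <= frame <= f1:
            t = (frame - f0) / (f1 - f0)
            return v0 + t * (v1 - v0)
    raise ValueError("frame lies outside the keyframed range")


# The user specifies three numbers; the program draws every line.
grid = make_grid(width=100, height=50, cell=10)
print(len(grid), "lines generated")

# The user sets three key values; the program fills in every frame between.
keys = [(0, 0.0), (10, 100.0), (20, 50.0)]
print([interpolate(keys, f) for f in range(0, 21, 5)])
```

In both cases the user supplies a handful of parameters and the algorithm produces all the intermediate detail, which is the difference in the level of automation that the paragraph above describes.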

Thus, although users may not care that one software tool does something that was not possible before digital computers while another tool simulates previous physical or electronic media, the distinction between the two types is something users experience in practice. The tools that belong to the first type showcase the ability of computers to automate processes; the tools that belong to the second type use invisible low-level automation behind the scenes while requiring users to direct them manually.

Filter > Stylize > Wind

Having established two sets of categories for software techniques (media independent vs. media specific; simulation of the old vs. new), let’s now test them against the Photoshop commands. As mentioned above, we will concentrate on filters – i.e. the set of commands that appear under the Filter menu. (Note that a large proportion of Photoshop filters are not unique to this program but are also available in other professional image editing, video editing and animation software – sometimes under different names. To avoid any possible misunderstanding, I will be referring to the Photoshop versions of these commands as implemented in Photoshop CS4, with their particular options and controls as defined in this software release.)4

The first thing that is easy to notice is that the names of many Photoshop filters refer to the techniques for image manipulation and creation and materials that were available before the development of media application software in the 1990s – painting, drawing and sketching, photography, glass, neon, photocopying. Each filter is given a set of explicit options that can be controlled with interactive sliders and/or by directly entering numerical values. These options make it possible to control a filter’s visual effects with a degree of precision that would be hard to achieve when using the corresponding physical tool.

This is a good example of my earlier point that simulations of prior physical media augment them with new properties. In this case, the new property is the explicit filter controls. These controls not only offer many options but also often allow you to set a filter’s properties numerically by choosing a precise value from a range. For example, the Palette Knife filter offers three options: Stroke Size, Stroke Detail, and Softness. Stroke Size can take values between 1 and 50; the other two options have similarly large ranges. (At the same time, it is important to note that expert users of many physical tools such as a paintbrush can also achieve many effects not possible in its software simulation. Thus, software simulations should not be thought of as linear improvements over previous media technologies.)

While some of these filters can be directly traced to previous physical and mechanical media such as oil painting and photography, others make a reference to actions or phenomena in the physical world that at first appear to have nothing to do with media. For instance, the Extrude filter generates a 3D set of blocks or pyramids and paints image parts on their faces, while the Wave filter creates the effect of ripples on the surface of an image.

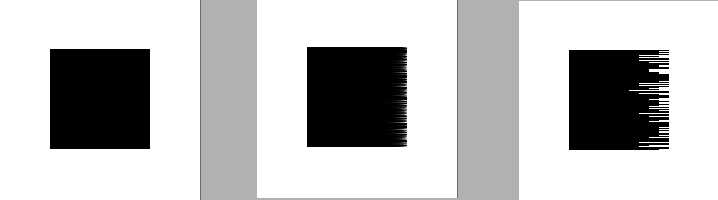

However, if we examine any of these filters in detail, we realize that things are not so simple. Let’s take the Wind filter (located under the Stylize submenu) as an example. This is how Photoshop CS4’s built-in Help describes this filter: “Places tiny horizontal lines in the image to create a windblown effect. Methods include Wind; Blast, for a more dramatic wind effect; and Stagger, which offsets the lines in the image.” We are all familiar with the visual effects of a strong wind on a physical environment (for instance, blowing through a tree or a field of grass) – but before you encountered this filter, you probably never imagined that you can “wind” an image. Shall we understand the name of this filter as a metaphor? Or perhaps, we can think of it as an example of a conceptual “blend” (which is how, according to Conceptual Blending theory,5 many concepts in natural languages get formed): “wind” plus “image” results in a new concept actualized in the operations of the Wind filter.

The situation is further complicated by the fact that the results of applying the Wind filter to an image look pretty different compared to what the actual wind does to a tree or a field of grass. However, they do look rather similar to a photograph of a real windy scene taken with a long exposure. Therefore, we can think of the name “Wind” both as a metaphor – to help us imagine what a particular algorithmic transformation does to an image – and as a simulation of a particular photographic technique (long exposure). In short, although its name points to the physical world, its actual operations may also refer to a pre-digital media technology.

Are there “Born Digital” Filters?

Let’s continue our exploration of Photoshop filters. The great majority of the filters make references to previous physical media or our experiences in the physical world – at least in terms of how they are named. Only a few don’t. These filters include High-pass, Median, Reduce Noise, Sharpen, and Equalize. Are these filters “born digital”? In other words, did we finally get to pure examples of “new” media techniques (2b)? The answer is no. As it turns out, all these filters are also software simulations that refer to things that already existed before digital computers.

Although they represent a small subset of Photoshop’s extensive filter collection, these filters are central to all electronics, telecommunication and IT technologies. Moreover, they are not unique to processing digital images but can be used on any kind of data – sounds, television transmission, data captured by an environmental sensor, data captured by medical imaging devices, etc.

In their Photoshop implementation, these filters work on continuous-tone images, but since they can also be applied to sound and other types of signals, they actually belong to our “media-independent” category (1b). In other words, they are general techniques developed first in engineering and later also in computer science for signal and information processing. The application of these techniques to images forms part of the field of image processing, defined as “any form of information processing for which the input is an image, such as photographs or frames of video.”6

Like these filters, many of the “new” techniques for media creation, editing, and analysis implemented in software applications were not developed specifically to work with media data.7 Rather, they were created for signal and information processing in general – and then were either directly carried over to, or adapted to work with media.

This is one of the most important theoretical dimensions in the shift from physical and mechanical media technologies to electronic media and then digital software. Previously, physical and mechanical media tools were used to create content which was directly accessible to human senses (with some notable exceptions like Morse code) – and therefore the possibilities of each tool and material were driven by what was meaningful to a particular human sense. A paintbrush could create brushstrokes that had color, thickness, and shape – properties directly speaking to human vision and touch. Similarly, the settings of photographic camera controls affected the sharpness and contrast of captured photos – characteristics meaningful to human vision. A different way to express this is to say that the “message” was not encoded in any way; it was created, stored, and accessed in its native form. So if we were to redraw the famous diagram of a communication system by Claude Shannon (1948)8 for the pre-electronics era, we would have to delete the encoding and decoding stages.

Media technologies based on electronics such as telegraph, telephone, radio, and television, and later digital computers, use the coding of messages (or “content”).

And this, in turn, makes possible the idea of information – a disembodied, abstract and universal dimension of any message separate from its content. Rather than operating on sounds, images, film, or text directly, electronic and digital devices operate on the continuous electronic signals or discrete numerical data. This allows for the definition of various operations that work on any signal or any set of numbers – regardless of what this signal or numbers may represent (images, video, student records, financial data, etc.). Examples of such operations are modulation, smoothing (i.e., reducing the differences in the data), and sharpening (exaggerating the differences). If the data is discrete, this allows for various additional operations such as sorting and searching.
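
To make the point concrete, here is a minimal sketch of smoothing and sharpening written for any list of numbers. The function names and the specific methods (a moving average for smoothing, and sharpening by adding back the detail that smoothing removes) are illustrative assumptions, not a description of how any particular device or application implements these operations.

```python
def smooth(values, radius=1):
    """Reduce differences: replace each value with the mean of its neighborhood."""
    out = []
    for i in range(len(values)):
        # The window is clipped at both ends of the sequence.
        window = values[max(0, i - radius): i + radius + 1]
        out.append(sum(window) / len(window))
    return out


def sharpen(values, amount=1.0, radius=1):
    """Exaggerate differences: add back the detail that smoothing removes."""
    blurred = smooth(values, radius)
    return [v + amount * (v - b) for v, b in zip(values, blurred)]


# The same functions apply whatever the numbers represent.
pixel_row = [0, 0, 0, 10, 10, 10]        # one row of a grayscale image
sensor_log = [20.1, 20.3, 25.0, 20.2]    # temperature readings
for data in (pixel_row, sensor_log):
    print(smooth(data))
    print(sharpen(data))
```

Applied to the row of pixels, smoothing softens the edge between the dark and light halves, while sharpening pushes the values on either side of that edge further apart; applied to the sensor readings, the same code damps or exaggerates the spike.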

The introduction of the coding stage allows for a new level of efficiency and speed in processing, transmitting and interacting with media data and communication content – and this is why first electronics and later, digital computers gradually replace all other media-specific tools and machines. Operations such as those just mentioned are now used to automatically process the signals and data in various ways – reducing the size of storage or bandwidth needed, improving quality of a signal to get rid of noise, and of course – perhaps most importantly – to send media data over communication networks.

The field of digital image processing began to develop in the second part of the 1950s when scientists and the military realized that digital computers can be used to automatically analyze and improve the quality of aerial and satellite imagery collected for military reconnaissance and space research. (Other early applications included character recognition and wire-photo standards conversion.)9 As a part of its development, the field took the basic filters that were already commonly used in electronics and adapted them to work with digital images. The Photoshop filters that automatically enhance image appearance (for instance, by boosting contrast, or by reducing noise) come directly from that period (late 1950s – early 1960s).

In summary, Photoshop’s seemingly “born digital” (or “software-native”) filters have direct physical predecessors in analog filters.10 These analog filters were first implemented by the inventors of the telephone, radio, electronic music instruments, and various other electronic media technologies during the first half of the 20th century. They were already widely used in the electronics industry and studied in the field of analog signal processing before they were adapted for digital image processing.

Filter > Distort > Wave

The challenges in deciding in what category to place Photoshop filters persist if we continue going through the Filter menu. The difficulties in deciding where to place this or that technique are directly related to the history of digital computers as simulation machines. Every element of computational media comes from some place outside of digital computers. This is true not only for a significant portion of media editing techniques – filters, digital paintbrushes and pencils, CAD tools, virtual musical instruments and keyboards, etc. – but also for the most basic computer operations such as sort and search, or basic ways to organize data such as a file or a database. Each of these operations and structures can, both conceptually and historically, be traced to previous physical or mechanical operations and to strategies of data, knowledge and memory management that were already in place before the 1940s. For example, computer “files” and “folders” refer to their paper predecessors already standard in every office. The first commercial digital computers from IBM were marketed as faster equivalents of electro-mechanical calculators, tabulators, sorters and other office equipment for data processing that IBM had already been selling for decades. However, whenever some physical operations and structures were simulated in a computer, they were simultaneously enhanced and augmented. This process of transferring physical world properties into a computer while augmenting them continues today – think, for instance, of the multi-touch interface popularized by the iPhone (2007). Thus, while Alan Turing defined the digital computer as a general-purpose simulation machine, when we consider its subsequent development and use, it is more appropriate to think of a computer as a simulation-augmentation machine. The difficulty of deciding how to classify different media software techniques is a direct result of this paradigm that underlies the development of what we now call software applications from the very start (i.e., Sutherland’s Sketchpad, 1962-1963).

The shift from physical media tools and materials to algorithms designed to simulate the effects of these tools and materials also has another important consequence. As we saw, some Photoshop filters explicitly refer to previous artistic media; others make reference to diverse physical actions, effects, and objects (Twirl, Extrude, Wind, Diffuse Glow, Ocean Ripple, Glass, Wave, Grain, Patchwork, Pinch, and others). But in both cases, by changing the values of the controls provided by each filter, we can vary its visual effect significantly on the familiar – unfamiliar dimension. We can use the same filter to achieve a look that may indeed appear to closely simulate the effect of the corresponding physical tool or physical phenomenon – or a look which is completely different from both nature and older media and which can be only achieved through algorithmic manipulation of the pixels. What begins as a reference to a physical world outside of the computer if we use default settings can turn into something totally alien with a change in the value of a single parameter. In other words, many algorithms only simulate the effects of physical tools and machines, materials or physical world phenomena when used with particular parameter settings; when these settings are changed, they no longer function as simulations.

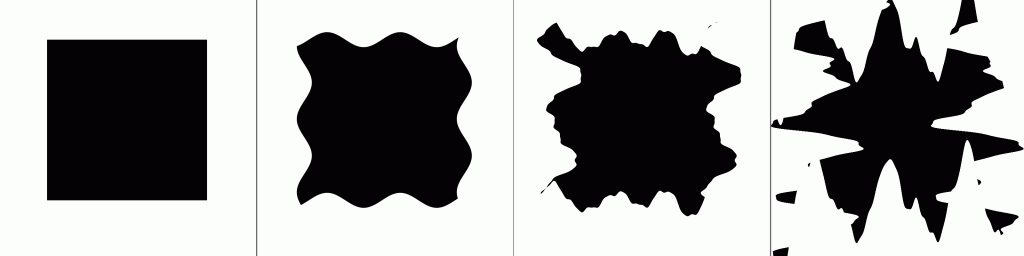

For an example, let’s analyze the behavior of the Wave filter (located under the Distort submenu). The filter refers to a familiar physical phenomenon, and indeed, it can produce visual effects which we would confidently call “waves.” This does not mean that the effect of this filter has to closely resemble the literal meaning of wave defined by a dictionary as “a disturbance on the surface of a liquid body, as the sea or a lake, in the form of a moving ridge or swell.”11 In our everyday language, we use the word “wave” metaphorically to refer to any kind of periodical movement (“waving a hand”), or any static form that resembles the form of a wave, or a disturbance in the ordinary state of affairs (“making waves”). According to an influential theory developed by cognitive linguist George Lakoff, such metaphorical use is not an exception, but the norm in human language and thinking. Lakoff proposed that the majority of our abstract concepts are metaphorical projections from sensorimotor experiences with our own body and the surrounding physical world.12 “Making waves” and other metaphors derived from our perceptual experience of seeing real waves exemplify this general mechanism of language.

If we follow Lakoff’s theory of metaphor, some details of the Wave filter operation – along with many other Photoshop filters that refer to the physical world – can be understood as similar metaphorical projections. Depending on the choice of parameter values, this filter can either produce effects that closely resemble our perceptual experience of actual physical waves, or new effects that are related to such waves metaphorically.

The filter generates sine wave functions (y = sin x), adds them up and uses the result to distort an image. A user can control the number of sine waves via a parameter called Number of Generators. If this number is set to 1, the filter generates a single sine wave. Applying this single function to an image distorts it using a periodically varying pattern. In other words, the filter indeed generates an effect that looks like a wave.

However, at some point the metaphorical connection to real world waves breaks, and the use of Lakoff’s theory no longer helps. If we increase the number of generators (it can go up to 999), the pattern produced by the filter no longer appears to be periodic, and therefore can no longer be related to real waves even metaphorically.

The reason for this filter behavior lies in its implementation. As we already explained, when the number of generators is set to 1, the algorithm generates a single sine function. If the option is set to 2, the algorithm generates two functions; if it is set to 3, it generates three functions, and so on. The parameters of each function are selected randomly within the user-specified range.

If we keep the number of generators small (2 – 5), sometimes these random values add up to a result that still resembles a wave; in other cases they do not. But when the number of functions is increased, the result of adding these separate functions with unique random parameters never looks like a wave.
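
The behavior described above can be sketched as follows. This is not Photoshop’s actual implementation, which is not documented here; it is a simplified illustration that assumes each generator is a sine function with a randomly chosen wavelength and amplitude, and that the distortion consists of shifting each image row horizontally by the summed value. All function names and default ranges are hypothetical.

```python
import math
import random


def make_generators(count, wavelength=(10, 120), amplitude=(5, 35), seed=0):
    """Pick `count` random (wavelength, amplitude) pairs within the given ranges."""
    rng = random.Random(seed)
    return [(rng.uniform(*wavelength), rng.uniform(*amplitude))
            for _ in range(count)]


def displacement(position, generators):
    """Sum the sine functions of all generators at one position."""
    return sum(a * math.sin(2 * math.pi * position / w) for w, a in generators)


def distort_rows(image, generators):
    """Shift each row of a 2D list sideways by the summed-sine offset, wrapping around."""
    out = []
    for y, row in enumerate(image):
        shift = round(displacement(y, generators)) % len(row)
        out.append(row[-shift:] + row[:-shift])
    return out


# One generator: the offsets repeat with a single, visible period.
one = make_generators(1)
print([round(displacement(y, one)) for y in range(0, 200, 20)])

# Fifty generators with unrelated wavelengths: no period is apparent.
many = make_generators(50)
print([round(displacement(y, many)) for y in range(0, 200, 20)])

# Distort a tiny test image whose rows are all identical.
image = [list(range(8)) for _ in range(6)]
for row in distort_rows(image, one):
    print(row)
```

With a single generator the sequence of offsets repeats after one wavelength. With many generators whose wavelengths are unrelated, the sum has no short common period, so no regularity is visible at the scale of the image.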

The Wave filter can create a practically endless variety of abstract patterns – and most of them are not periodic in an obvious way, i.e. they are no longer visually recognizable as “waves.” What they are is the result of a computer algorithm that uses mathematical formulas and operations (generating and adding sine functions) to create a vast space of visual possibilities. So although the filter is called “Wave,” only a tiny part of its space of possible patterns corresponds to the wave-like visual effects in the real world.

The same considerations apply to many other Photoshop filters that make references to physical media. Similar to the Wave filter, the filters gathered under the Artistic and Texture submenus produce very precise simulations of the visual effects of physical media with a particular range of parameter settings; but when the parameters are outside of this range, these filters generate a variety of abstract patterns.

The operation of these Photoshop filters has important theoretical consequences. Earlier I pointed out that the software tools that simulate physical instruments – paint brushes, pens, rulers, erasers, etc. – require manual control, while the tools that do not refer to any previous media offer higher level automation. A user sets the parameter values and the algorithm automatically creates the desired result.

The same “high-level” automation underlies the so-called “generative” (or “procedural”) software techniques common today. The work ranges from still and motion graphics to architecture and games – from live visuals and animations by software artist Lia13 to the massive procedurally generated worlds in the game Minecraft.14 Other generative projects use algorithms to automatically create complex shapes, animations, spatial forms, music, architectural plans, etc. (A good selection of interactive generative works and generative animations can be found at www.processing.org/exhibition/.) Since most artworks created with generative algorithms are abstract, artists and theorists like to oppose them to software such as Photoshop and Painter that are widely used by commercial illustrators and photographers in the service of realism and figuration. Additionally, because these applications simulate an older manual model of creation, they are also seen as less “new media specific” than generative software. The end result of both of these critiques is that software that simulates “old media” is thought to be conservative, while generative algorithms and artworks are presented as progressive because they are unique to “new media.” (When people claim that artworks that involve writing computer code qualify as “digital art” while artworks created using Photoshop or other media applications do not, they rehearse a version of the same argument.)

However, as they are implemented in media applications such as Photoshop, software techniques that simulate previous media and the software techniques that are explicitly procedural and use higher-level automation are part of the same continuum. As we saw with the Wave filter, the same algorithm can generate an abstract image or a realistic one. Similarly, particle systems algorithms are used by new media artists and motion graphics designers to generate abstract animations; the same algorithms are also widely used in film production to generate realistic-looking explosions, fireworks, flocks of birds and other physical natural phenomena. In another example, procedural techniques often used in architectural design to create abstract spatial structures are also used in video games to generate realistic 3D environments.

The History and Actions Menus

I started this discussion of Photoshop filters to test the usefulness of two schemes for classifying the seemingly endless variety of software techniques available in media software: 1) media independent vs. media specific techniques (first scheme); 2) the simulations of previous tools vs. techniques which do not explicitly simulate prior media (second scheme). The first scheme draws our attention to the fact that all media applications share some genes, so to speak, while also providing some techniques that can only work on particular data types. The second scheme is useful if we want to understand the software techniques in terms of their genealogy and their relation to previous physical, mechanical and electronic media.

Although the previous discussion highlighted the difficult borderline cases, in other cases the divisions are clear. For example, the Brushstrokes filter family in Photoshop clearly takes inspiration from earlier physical media tools, while Add Noise does not. The Copy and Paste commands are examples of media-independent techniques; the Auto Contrast and Replace Color commands are examples of media-specific techniques.

However, beyond these distinctions suggested by the two schemes I proposed, all software techniques for media creation, editing, and interaction also share some additional common traits that we have not discussed yet. Conceptually, these traits are different from common media independent techniques such as copy and paste. What are they?

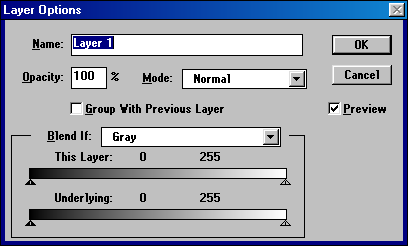

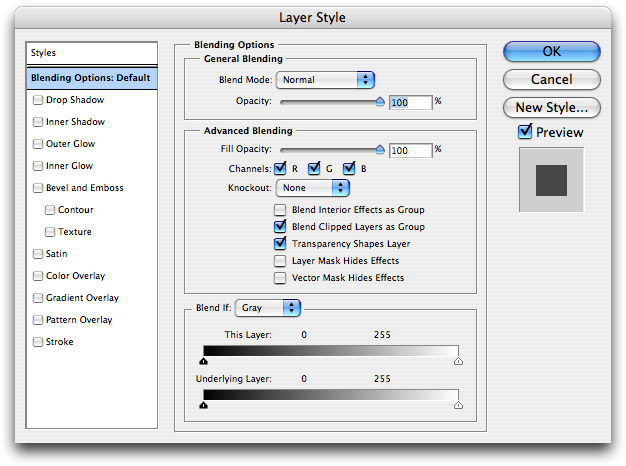

Regardless of whether they refer to some pre-existing instrument, action, or phenomenon in the physical world or not, the media techniques available in application software are implemented as computer programs and functions. Consequently, they follow the principles of modern software engineering in general. Additionally, their interfaces use established conventions employed in all application software – regardless of whether these tools are part of spreadsheet software, inventory management software, financial analysis software, or web design software. They are given extensive numerical controls; their settings can be saved and retrieved later; their use is recorded in a History palette so it can be recalled later; they can be used automatically by recording and playing Actions; and so on. (The terms “History palette” and “Actions” refer to Photoshop, but the concepts behind them are found in many other software applications.) In other words, they acquire the full functionality of the modern software environment – functionality that is significantly different from that of the physical tools and machines that existed previously. Because of these shared implementation principles, all application software is like a set of species that belong to the same evolutionary family, with media software occupying a branch of the tree.15
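To make these shared conventions concrete, here is a minimal sketch of how numeric settings, a history list, and recordable actions fit together. It is not Photoshop's implementation: the names `Editor`, `brighten`, `invert`, and `play`, and the tiny list-of-rows image, are all hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple

Image = List[List[int]]  # a tiny grayscale image: rows of 0-255 values (assumption)


def brighten(image: Image, amount: int) -> Image:
    """Return a copy with every pixel raised by `amount`, clamped to 0-255."""
    return [[max(0, min(255, p + amount)) for p in row] for row in image]


def invert(image: Image) -> Image:
    """Return a copy with every pixel inverted."""
    return [[255 - p for p in row] for row in image]


@dataclass
class Editor:
    """Hypothetical editor that records every command it runs."""
    image: Image
    history: List[Tuple[str, Image]] = field(default_factory=list)

    def apply(self, name: str, op: Callable[..., Image], **settings) -> None:
        """Run a command with numeric settings and record the resulting state."""
        self.image = op(self.image, **settings)
        self.history.append((f"{name} {settings}", self.image))

    def revert_to(self, step: int) -> None:
        """Recall the state stored at an earlier history step."""
        self.image = self.history[step][1]


# An "action" is a saved list of commands and settings that can be replayed.
Action = List[Tuple[str, Callable[..., Image], dict]]


def play(action: Action, editor: Editor) -> None:
    """Replay a recorded action on whatever image the editor currently holds."""
    for name, op, settings in action:
        editor.apply(name, op, **settings)


recorded: Action = [("Brighten", brighten, {"amount": 40}), ("Invert", invert, {})]
editor = Editor([[0, 100], [200, 250]])
play(recorded, editor)   # editor.image is now [[215, 115], [15, 0]]
editor.revert_to(0)      # back to the state after Brighten: [[40, 140], [240, 255]]
```

Nothing in this sketch depends on the data being an image. The same recording and replaying machinery would work for spreadsheet cells or text, which is the point made above about conventions shared by all application software.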

The pioneers of media software aimed to extend the properties of media technologies and tools they were simulating in a computer – in each case, as formulated by Kay and Goldberg, the goal was to create “a new medium with new properties.” Consequently, software techniques that refer to previous physical, mechanical, or electronic tools and creative processes are also “new media” because they behave so differently from their predecessors. We now have an additional reason to support this conclusion. New functionality (for instance, multiple zoom levels), the presence of media-independent techniques (copy, paste, search, etc.), and standard interface conventions (such as numerical controls for every tool, the preview option, or the command history) further separate even the most “realistic” media simulation tool from its predecessors.

This means that to use any media authoring and editing software is to use “new media.” Or, to unfold this statement: all media techniques and tools available in software applications are “new media” – regardless of whether or not a particular technique or program refers to previous media, physical phenomena, or a common task that existed before it was turned into software. To write using Microsoft Word is to use new media. To take pictures with a digital camera is to use new media. To apply the Photoshop Clouds filter (Filter > Render > Clouds) that uses a purely automatic algorithmic process to create a cloud-like texture is to use new media. To draw brushstrokes using the Photoshop brush tool is to use new media.

In other words, regardless of where a particular technique would fall in our classification schemes, all these techniques are instances of one type of technology – interactive application software. And, as Kay and Goldberg explained in their 1977 article quoted earlier, interactive software is qualitatively different from all previous media. Over the next thirty years, these differences only grew larger. Interactivity, customization, the possibility to both simulate other media and information technologies and to define new ones, processing of vast amounts of information in real time, control of and interaction with other machines such as sensors, support of both distributed asynchronous and real-time collaboration – these and many other functionalities enabled by modern software (of course, working together with middleware, hardware, and networks) separate software from all previous media and information technologies and tools invented by humans.

The Layers Menu

For our final analysis, we will go outside the Filter menu and examine one of the key features of Photoshop that originally differentiated it from many “consumer” media editors – the Layers palette. The Layers feature was added to Photoshop 3.0, released in 1994.16 To quote Photoshop Help, “Layers allow you to work on one element of an image without disturbing the others.”17 From the point of view of media theory, however, the Layers feature is much more than that. It redefines both how images are created and what an “image” actually means. What used to be an indivisible whole becomes a composite of separate parts. This is both a theoretical point, and the reality of professional design and image editing in our software society. Any professional design created in Photoshop is likely to use multiple layers (in Photoshop CS4, a single image can have thousands of layers). Since each layer can always be made invisible, layers can also act as containers for elements that potentially may go into the composition; they can also hold different versions of these elements. A designer can control the transparency of each layer, group them together, change their order, etc.

Layers change how a designer or an illustrator thinks about images. Instead of working on a single design, with each change immediately (and in the case of physical media such as paint or ink, irreversibly) affecting this image, she now works with a collection of separate elements. She can play with these elements, deleting, creating, importing and modifying them, until she is satisfied with the final composition – or a set of possible compositions that can be defined using Layer Groups. And since the contents and the settings of all layers are saved in an image file, she can always come back to this image to generate new versions or to use its elements in new compositions.

The layers can also have other functions. To again quote Photoshop CS4’s online Help, “Sometimes layers don’t contain any apparent content. For example, an adjustment layer holds color or tonal adjustments that affect the layers below it. Rather than edit image pixels directly, you can edit an adjustment layer and leave the underlying pixels unchanged.”18 In other words, the layers may contain editing operations that can be turned on and off, and re-arranged in any order. An image is thus redefined as a provisional composite of both content elements and various modification operations that are conceptually separate from the elements.
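The idea of an image as a provisional composite of content and operations can be sketched in a few lines. The following is an illustrative model only, not Photoshop's actual data structure: the class names `PixelLayer` and `AdjustmentLayer`, the `render` function, and the one-row grayscale "image" are assumptions made for the example.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Union

Pixels = List[Optional[int]]  # one row of grayscale values; None means transparent


@dataclass
class PixelLayer:
    """A layer that holds content."""
    pixels: Pixels
    visible: bool = True


@dataclass
class AdjustmentLayer:
    """A layer that holds an operation instead of content."""
    adjust: Callable[[int], int]
    visible: bool = True


Layer = Union[PixelLayer, AdjustmentLayer]


def render(stack: List[Layer], width: int) -> Pixels:
    """Flatten the stack, bottom layer first, into the image the user sees."""
    result: Pixels = [None] * width
    for layer in stack:  # stack[0] is the bottom layer
        if not layer.visible:
            continue
        if isinstance(layer, PixelLayer):
            # Opaque pixels cover what lies below; transparent ones let it show.
            result = [top if top is not None else below
                      for top, below in zip(layer.pixels, result)]
        else:
            # An adjustment affects everything rendered below it so far.
            result = [layer.adjust(p) if p is not None else None for p in result]
    return result


background = PixelLayer([10, 20, 30, 40])
darken = AdjustmentLayer(lambda p: p // 2)
logo = PixelLayer([None, 200, 200, None])
stack = [background, darken, logo]

with_adjustment = render(stack, 4)      # [5, 200, 200, 20]
darken.visible = False
without_adjustment = render(stack, 4)   # [10, 200, 200, 40]
```

In this sketch the background pixels are never modified. Turning the adjustment off, or moving it to another position in the list, simply changes what `render` produces the next time it runs.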

We can compare this fundamental change in the concept and practice of image creation with a similar change that took place in mapping – a shift from paper maps to GIS (Geographical Information System). Just as all media professionals use Photoshop, today the majority of professional users that deal with physical spaces – city offices, utility companies, oil companies, marketers, hospital emergency teams, geologists and oceanographers, military and security agencies, police, etc. – use GIS systems. Consumer mapping software such as Google Maps, MapQuest and Google Earth can be thought of as very simplified GIS systems. They don’t offer the features that are crucial for professionals such as spatial analysis. (An example of spatial analysis is directing software to automatically determine the best positions of new supermarkets based on existing demographic, travel, and retail data.)

GIS “captures, stores, analyzes, manages, and presents data that is linked to location.”19 The central concept of GIS is a stack of data layers united by common spatial coordinates. There is an obvious conceptual connection to the use of layers in Photoshop and other media software applications – however, GIS systems work with any data that has geospatial coordinates rather than only images positioned on separate layers. The geospatial coordinates align different datasets together. As used by professionals, “maps” constructed with GIS software may contain hundreds or even thousands of layers. The layered representation is also used in consumer applications such as Google Earth. However, while in professional applications such as ArcGIS the user can create her own layered map from any data sources, in Google Earth users can only add their own data to the base representation of Earth, which is provided by Google and can’t be modified.

In the GIS paradigm, space functions as a media platform which can hold all kinds of data types together – points, 2D outlines, maps, images, video, numerical data, text, links. (Other types of such media platforms commonly used today are databases, web pages, and spaces created via 3D compositing.) In Photoshop the layers are still conceptually subordinated to the final image – when you are using the application, it continuously renders all visible layers together to show this image. So although you can use a Photoshop image as a kind of media database – a way to collect together different image elements – this is not the intended use (you are supposed to use separate programs such as Adobe Bridge or Aperture to do that). GIS takes the idea of a layered representation further. When professional users work with a GIS application, they may never output a single map that would contain all the data. Instead, a user selects the data she needs to work with at that moment and then performs various operations on this data (in practice, this means selecting a subset of all the data layers available). If a traditional map offers a fixed representation, GIS, as its name implies, is an information system: a way to manage and work with large sets of separate data entities linked together – in this case, via a shared coordinate system.
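The difference from Photoshop can be illustrated with a toy model in which layers of different data types are linked only by shared coordinates, and the user queries a chosen subset instead of rendering everything into one picture. This is a sketch under simplifying assumptions: the layer names, the sample values, the integer grid cells standing in for geospatial coordinates, and the `query` function are all invented for the example and do not correspond to any real GIS API.

```python
from typing import Any, Dict, Iterable, Tuple

Coord = Tuple[int, int]          # a grid cell standing in for real coordinates
GisLayer = Dict[Coord, Any]      # each layer maps locations to its own data type

# Hypothetical layers holding numbers, text, and labels side by side.
layers: Dict[str, GisLayer] = {
    "population": {(0, 0): 1200, (0, 1): 300},
    "roads": {(0, 0): "Main St", (1, 1): "Route 9"},
    "stores": {(0, 1): "supermarket"},
}


def query(all_layers: Dict[str, GisLayer],
          selected: Iterable[str],
          cell: Coord) -> Dict[str, Any]:
    """Return what each selected layer records at one location."""
    return {name: all_layers[name][cell]
            for name in selected
            if cell in all_layers[name]}


# The user works with a subset of layers; no single flattened map is produced.
here = query(layers, ["population", "roads"], (0, 0))
# here == {"population": 1200, "roads": "Main St"}
```

The shared coordinate is the only thing the layers have in common, which is what lets such a system hold heterogeneous data together.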

From Programming Techniques to Digital Compositing

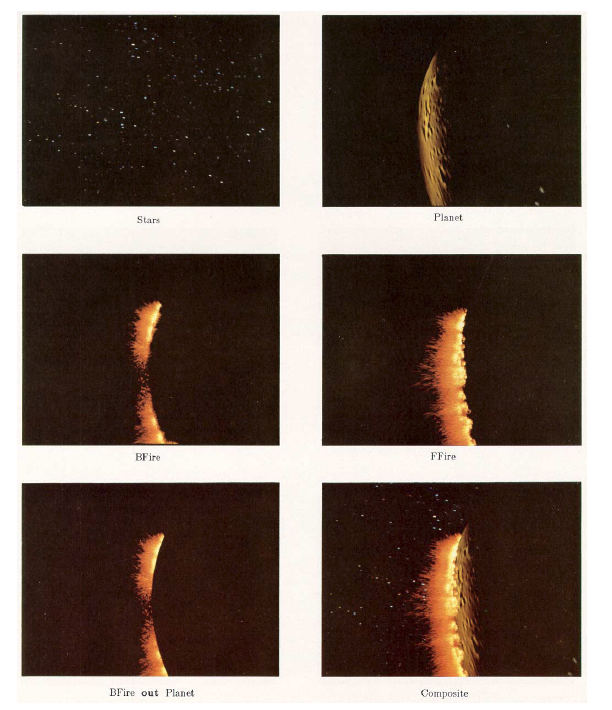

What is the conceptual origin of Layers in Photoshop? Where do Layers belong in relation to my taxonomies of software-based media techniques? Thinking about various possible sources of this concept and also considering how it relates to other modern media editing techniques takes us in a number of different directions. First of all, layers are not specific to raster image editors such as Photoshop; this technique is also used in vector image editors (Illustrator), motion graphics and compositing software (After Effects), video editors (Final Cut), and sound editors (Pro Tools). In programs that work with time-based data – sound editors, animation and compositing programs, and video and film editors – layers are usually referred to as “channels” or “tracks”; these different terms point to the particular physical and electronic media which a corresponding digital application has replaced (analog video switchers, multitrack audio recorders). Despite the difference in terms, the technique functions in the same way in all these applications: a final composition is the result of “adding up” data (technically, a composite) stored in different layers/channels/tracks.

Photoshop Help explains Layers in the following way: “Photoshop layers are like sheets of stacked acetate. You can see through transparent areas of a layer to the layers below.”20 Although not explicitly named by this Help article, the reference here is to the standard technique of 20th-century commercial animation – cel animation. Like a film camera mounted above the animation stand, Photoshop software is continuously “shooting” the image created through a juxtaposition of visual elements contained on separate layers.

It is not surprising that Photoshop Layers are closely related to 20th-century visual media techniques such as cel animation, as well as to various practices of pre-digital compositing such as multiple exposure, background projection, mattes in filmmaking, and video keying.21 However, there is also a strong conceptual link between image Layers and 20th-century music technology. The use of layers in media software to separate different elements of a visual and/or temporal composition strongly parallels the earlier practice of multitrack audio recording. The inventor of multitrack recording was the guitarist Les Paul; in 1953 he commissioned Ampex to build the first eight-track recorder. In the 1960s multitrack recorders were already being used by Frank Zappa, the Beach Boys and the Beatles; from that point on, multitrack recording became the standard practice for all music recording and arranging.22 Originally a bulky and very expensive machine, the multitrack recorder was eventually simulated in software and is now available in many applications. For instance, since 2004 Apple has included the multitrack recorder and editor GarageBand on all its new computers. Other popular software implementations include the free application Audacity and the professional-level Pro Tools.

Finally, yet another lead links Layers to a general principle of modern computer programming. In 1984 two computer scientists, Thomas Porter and Tom Duff, working for ILM (Industrial Light and Magic, a special effects unit of Lucasfilm), formally defined the concept of digital compositing in a paper presented at SIGGRAPH.23 The concept emerged from the work ILM was doing on special effects scenes for 1982’s Star Trek II: The Wrath of Khan. The key idea was to render each separate element with a matte channel containing transparency information. This allowed the filmmakers to create each element separately and then later combine them into a photorealistic 3D scene.
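The role of the matte channel can be shown with the “over” rule that is the best-known operator in the digital compositing literature. The sketch below applies it to single pixels; the tuple representation, the function names, and the sample colours are assumptions made for illustration, and the colour values are taken to be already multiplied by their alpha.

```python
from functools import reduce
from typing import List, Tuple

# Premultiplied red, green, blue plus alpha (the matte), each in the range 0..1.
RGBA = Tuple[float, float, float, float]


def over(a: RGBA, b: RGBA) -> RGBA:
    """Place element `a` over element `b`.

    Each channel, alpha included, becomes a + b * (1 - alpha_a):
    wherever `a` is transparent, `b` shows through.
    """
    keep = 1.0 - a[3]
    return tuple(ca + cb * keep for ca, cb in zip(a, b))


def composite(front_to_back: List[RGBA]) -> RGBA:
    """Combine separately rendered elements, frontmost element first."""
    return reduce(over, front_to_back)


half_red = (0.5, 0.0, 0.0, 0.5)      # red at 50% opacity, premultiplied
opaque_blue = (0.0, 0.0, 1.0, 1.0)

pixel = composite([half_red, opaque_blue])   # (0.5, 0.0, 0.5, 1.0)
```

Because each element carries its own matte, changing one element only requires running `composite` again with the new element in the list; the other elements do not have to be rendered again.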

Porter and Duff’s paper makes an analogy between creating a final scene by compositing 3D elements and assembling separate code modules into a complete computer program. As Porter and Duff explain, the experience of writing software in this way led them to consider using the same strategy for making images and animations. In both cases, the parts can be re-used to make new wholes:

Experience has taught us to break down large bodies of source code into separate modules in order to save compilation time. An error in one routine forces only the recompilation of its module and the relatively quick reloading of the entire program. Similarly, small errors in coloration or design in one object should not force “recompilation” of the entire image.24

The same idea of treating an image as a collection of elements that can be changed independently and re-assembled into new images is behind Photoshop Layers. Importantly, Photoshop was developed at the same place where the principles of digital compositing were defined earlier. The first version of the program was written by brothers Thomas and John Knoll when Thomas took a six-month leave from the PhD program at the University of Michigan in 1988 to join his brother who was then working at ILM.

This link between a popular software technique for image editing and a general principle of modern computer programming is very telling. It is a perfect example of how all elements of the modern media software ecosystem – applications, file formats, interfaces, techniques, tools and algorithms used to create, view, edit, and share media content – have not just one but two parents, each with its own DNA: media and cultural practices, on the one hand, and software development, on the other.

In short: through the work of many people, from Ivan Sutherland in the early 1960s to the teams at ILM, Macromedia, Adobe, Apple and other companies in the 1980s and 1990s, media becomes software – with all the theoretical and practical consequences such a transition entails. This article has dived into Photoshop’s Filter and Layers menus to discuss some of these consequences – but more still remain to be uncovered.

References

Adobe, Photoshop Help, http://help.adobe.com/en_US/Photoshop/11.0/, accessed October 9, 2011.

Dictionary.com, “Wave. (n.d.),” Dictionary.com Unabridged (v 1.1). Retrieved December 29, 2007, from Dictionary.com website: http://dictionary.reference.com/browse/wave/.

Alan Kay and Adele Goldberg, “Personal Dynamic Media”, IEEE Computer. Vol. 10 No. 3 (March), 1977.

Lakoff, George & Mark Johnson (1980), Metaphors We Live By. Chicago: University of Chicago Press.

Lev Manovich, The Language of New Media (The MIT Press, 2001).

Thomas Porter and Tom Duff, “Compositing Digital Images,” Computer Graphics vol. 18, no. 3 (July 1984): 253-259.

Lia, Liaworks, http://www.liaworks.com/category/theprojects/, accessed October 9, 2011.

Manovich, Lev. “Alan Kay’s Universal Media Machine,” in Arild Fetveit & Gitte Bang Stald, eds., Northern Lights 2006: Digital Aesthetics and Communication (University of Copenhagen, 2007).

Lev Manovich, Software Takes Command. First draft, November 2008, online at http://www.softwarestudies.com/softbook/.

Azriel Rosenfeld, Picture Processing by Computer (New York: Academic Press, 1969).

C.E. Shannon, “A Mathematical Theory of Communication”, Bell System Technical Journal, vol. 27, pp. 379-423, 623-656, July, October, 1948.

Noah Wardrip-Fruin and Nick Montfort, eds., New Media Reader, (The MIT Press, 2003), 395. Emphasis mine – L.M.

Wikipedia, http://en.wikipedia.org/ accessed October 9, 2011.

Notes

1. This is the goal of my book in progress Software Takes Command. The first draft was released online under a Creative Commons license in November 2008 at http://www.softwarestudies.com/softbook/.

2. I discuss how pioneers of computational media systematically added new properties to their simulations of prior physical media in much more detail in Manovich, Lev. “Alan Kay’s Universal Media Machine,” in Arild Fetveit & Gitte Bang Stald, eds., Northern Lights 2006: Digital Aesthetics and Communication (University of Copenhagen, 2007). The summary offered here is extracted from this article. The article is available online at http://www.manovich.net/.

3. Alan Kay and Adele Goldberg, ‘Personal Dynamic Media’, IEEE Computer. Vol. 10 No. 3 (March), 1977. The quote is from the reprint of this article in The New Media Reader, eds. Noah Wardrip-Fruin and Nick Montfort (The MIT Press, 2003), 395. Emphasis mine – L.M.

4. For a history of Photoshop version releases, see http://en.wikipedia.org/wiki/Adobe_Photoshop_release_history/.

5. http://en.wikipedia.org/wiki/Conceptual_blending/, accessed October 9, 2011.

6. Ibid.

7. Thus, the development of software brings different media types closer together because the same techniques can be used on all of them. At the same time, “media” now share a relationship with all other information types, be they financial data, patient records, results of scientific experiments, etc.

8. C.E. Shannon, “A Mathematical Theory of Communication”, Bell System Technical Journal, vol. 27, pp. 379-423, 623-656, July, October, 1948.

9. Even though image processing represents an active area of computer science, and is widely used in contemporary societies, I am not aware of any books or even articles that trace its history. A future historian would have to refer to the original publications in the field, such as its first textbook: Azriel Rosenfeld, Picture Processing by Computer (New York: Academic Press, 1969).

10. http://en.wikipedia.org/wiki/Analog_signal_processing/, accessed October 9, 2011.

11. wave. (n.d.). Dictionary.com Unabridged (v 1.1). Retrieved December 29, 2007, from Dictionary.com website: http://dictionary.reference.com/browse/wave/.

12. Lakoff, George & Mark Johnson (1980), Metaphors We Live By. Chicago: University of Chicago Press.

13. http://www.liaworks.com/category/theprojects/, accessed October 9, 2011.

14. http://en.wikipedia.org/wiki/Minecraft, accessed October 9, 2011.

15. Contemporary biology no longer uses the idea of an evolutionary tree; the “species” concept has similarly proved to be problematic. Here I am using these terms only metaphorically.

16. http://en.wikipedia.org/wiki/Adobe_Photoshop_release_history, accessed October 9, 2011.

17. http://help.adobe.com/en_US/Photoshop/11.0/, accessed October 9, 2011.

18. http://help.adobe.com/en_US/Photoshop/11.0/, accessed October 9, 2011.

19. http://en.wikipedia.org/wiki/GIS/, accessed October 9, 2011.

20. Photoshop CS4 Help, “About layers.” http://help.adobe.com/en_US/Photoshop/11.0/, accessed October 9, 2011.

21. The chapter “Compositing” in The Language of New Media presents an “archeology” of digital compositing that discusses the links between these earlier technologies. Lev Manovich, The Language of New Media (The MIT Press, 2001).

22. See http://en.wikipedia.org/wiki/Multitrack_tape_recorder/ and http://en.wikipedia.org/wiki/History_of_multitrack_recording/, accessed October 9, 2011.

23. Thomas Porter and Tom Duff, “Compositing Digital Images,” Computer Graphics vol. 18, no. 3 (July 1984): 253-259.

24. Ibid.